Extending Kubernetes

Different ways to change the behavior of your Kubernetes cluster.

Kubernetes is highly configurable and extensible. As a result, there is rarely a need to fork or submit patches to the Kubernetes project code.

This guide describes the options for customizing a Kubernetes cluster. It is aimed at cluster operators who want to understand how to adapt their Kubernetes cluster to the needs of their work environment. Developers who are prospective Platform Developers or Kubernetes Project Contributors will also find it useful as an introduction to what extension points and patterns exist, and their trade-offs and limitations.

Overview

Customization approaches can be broadly divided into configuration, which only involves changing flags, local configuration files, or API resources; and extensions, which involve running additional programs or services. This document is primarily about extensions.

Configuration

Configuration files and flags are documented in the Reference section of the online documentation, under each binary.

Flags and configuration files may not always be changeable in a hosted Kubernetes service or a distribution with managed installation. When they are changeable, they are usually only changeable by the cluster administrator. Also, they are subject to change in future Kubernetes versions, and setting them may require restarting processes. For those reasons, they should be used only when there are no other options.

Built-in policy APIs, such as ResourceQuota, PodSecurityPolicies, NetworkPolicy, and Role-based Access Control (RBAC), are provided by Kubernetes itself. These APIs are typically usable with hosted Kubernetes services and with managed Kubernetes installations. They are declarative and use the same conventions as other Kubernetes resources like pods, so new cluster configuration can be repeatable and can be managed the same way as applications. Where they are stable, they enjoy a defined support policy like other Kubernetes APIs. For these reasons, they are preferred over configuration files and flags where suitable.

Extensions

Extensions are software components that extend and deeply integrate with Kubernetes. They adapt it to support new types and new kinds of hardware.

Most cluster administrators will use a hosted or distribution instance of Kubernetes. As a result, most Kubernetes users will not need to install extensions, and fewer will need to author new ones.

Extension Patterns

Kubernetes is designed to be automated by writing client programs. Any program that reads and/or writes to the Kubernetes API can provide useful automation. Automation can run on the cluster or off it. By following the guidance in this doc you can write highly available and robust automation. Automation generally works with any Kubernetes cluster, including hosted clusters and managed installations.

There is a specific pattern for writing client programs that work well with Kubernetes called the Controller pattern. Controllers typically read an object's .spec, possibly do things, and then update the object's .status.
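
As a rough illustration of the Controller pattern described above, the sketch below reconciles a single object held in memory. The `reconcile` function and the `replicas` and `readyReplicas` field names are hypothetical, chosen only for this example; a real controller would read and write objects through the Kubernetes API rather than a local dictionary.

```python
import copy


def reconcile(obj, running):
    """Read obj['spec'], act on the world, and return obj with an updated status.

    `running` is a plain list standing in for whatever the controller manages.
    """
    desired = obj["spec"]["replicas"]

    # "Possibly do things": start or stop instances until the count matches.
    while len(running) < desired:
        running.append(f"{obj['metadata']['name']}-{len(running)}")
    while len(running) > desired:
        running.pop()

    # Report what was observed on .status; .spec is left untouched.
    updated = copy.deepcopy(obj)
    updated["status"] = {"readyReplicas": len(running)}
    return updated


obj = {"metadata": {"name": "demo"}, "spec": {"replicas": 3}}
running = []
obj = reconcile(obj, running)
print(obj["status"], running)
```

Calling `reconcile` again after changing `spec.replicas` moves the observed state toward the new desired state, which is the property that makes the pattern robust to missed events.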

A controller is a client of Kubernetes. When Kubernetes is the client and calls out to a remote service, it is called a Webhook. The remote service is called a Webhook Backend. Like Controllers, Webhooks do add a point of failure.

In the webhook model, Kubernetes makes a network request to a remote service. In the Binary Plugin model, Kubernetes executes a binary (program). Binary plugins are used by the kubelet (e.g. Flex Volume Plugins and Network Plugins) and by kubectl.

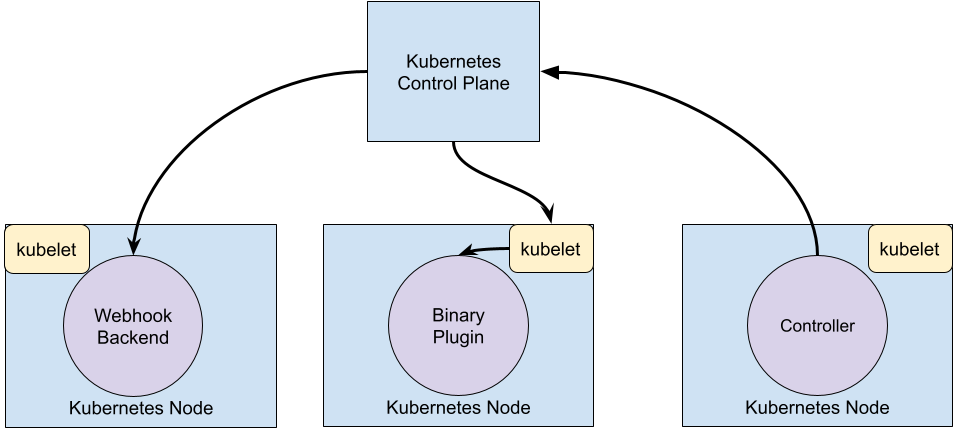

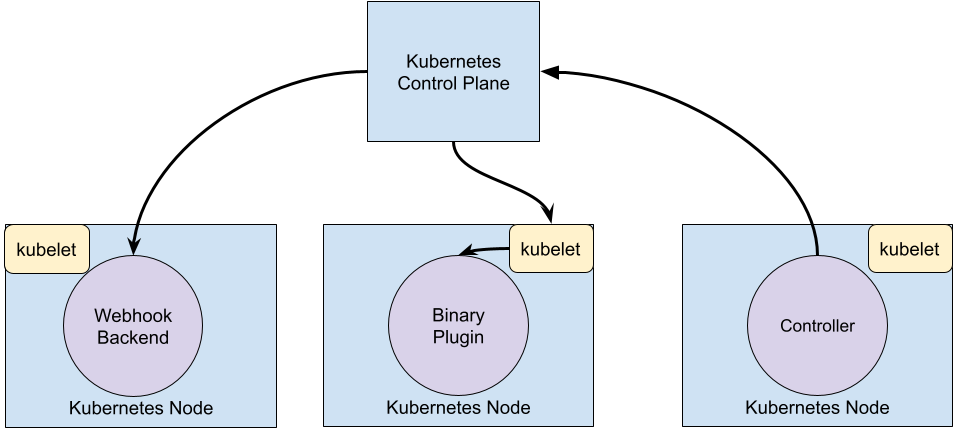

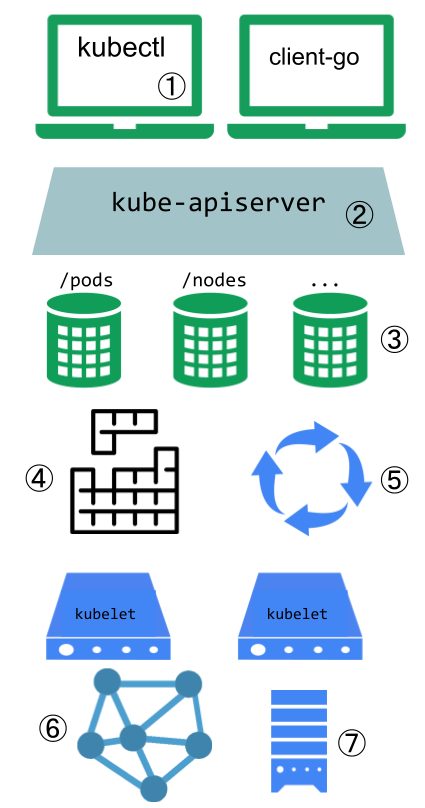

Below is a diagram showing how the extension points interact with the

Kubernetes control plane.

Extension Points

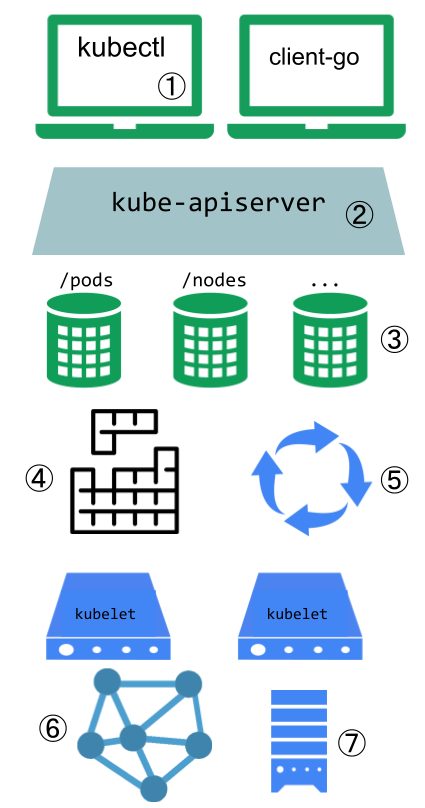

This diagram shows the extension points in a Kubernetes system.

- Users often interact with the Kubernetes API using kubectl. Kubectl plugins extend the kubectl binary. They only affect the individual user's local environment, and so cannot enforce site-wide policies.

- The apiserver handles all requests. Several types of extension points in the apiserver allow authenticating requests, or blocking them based on their content, editing content, and handling deletion. These are described in the API Access Extensions section.

- The apiserver serves various kinds of resources. Built-in resource kinds, like pods, are defined by the Kubernetes project and can't be changed. You can also add resources that you define, or that other projects have defined, called Custom Resources, as explained in the Custom Resources section. Custom Resources are often used with API Access Extensions.

- The Kubernetes scheduler decides which nodes to place pods on. There are several ways to extend scheduling. These are described in the Scheduler Extensions section.

- Much of the behavior of Kubernetes is implemented by programs called Controllers which are clients of the API-Server. Controllers are often used in conjunction with Custom Resources.

- The kubelet runs on servers, and helps pods appear like virtual servers with their own IPs on the cluster network. Network Plugins allow for different implementations of pod networking.

- The kubelet also mounts and unmounts volumes for containers. New types of storage can be supported via Storage Plugins.

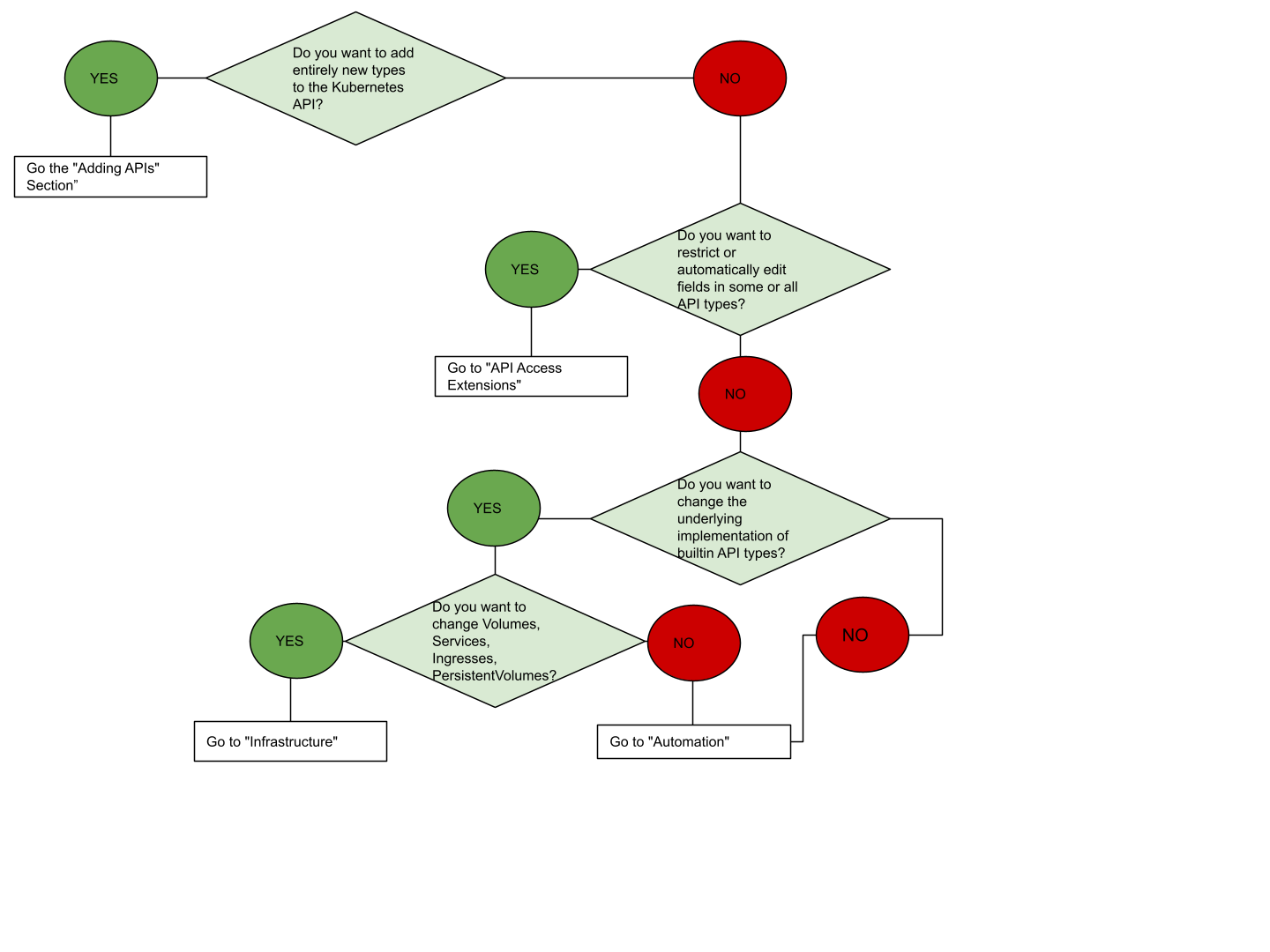

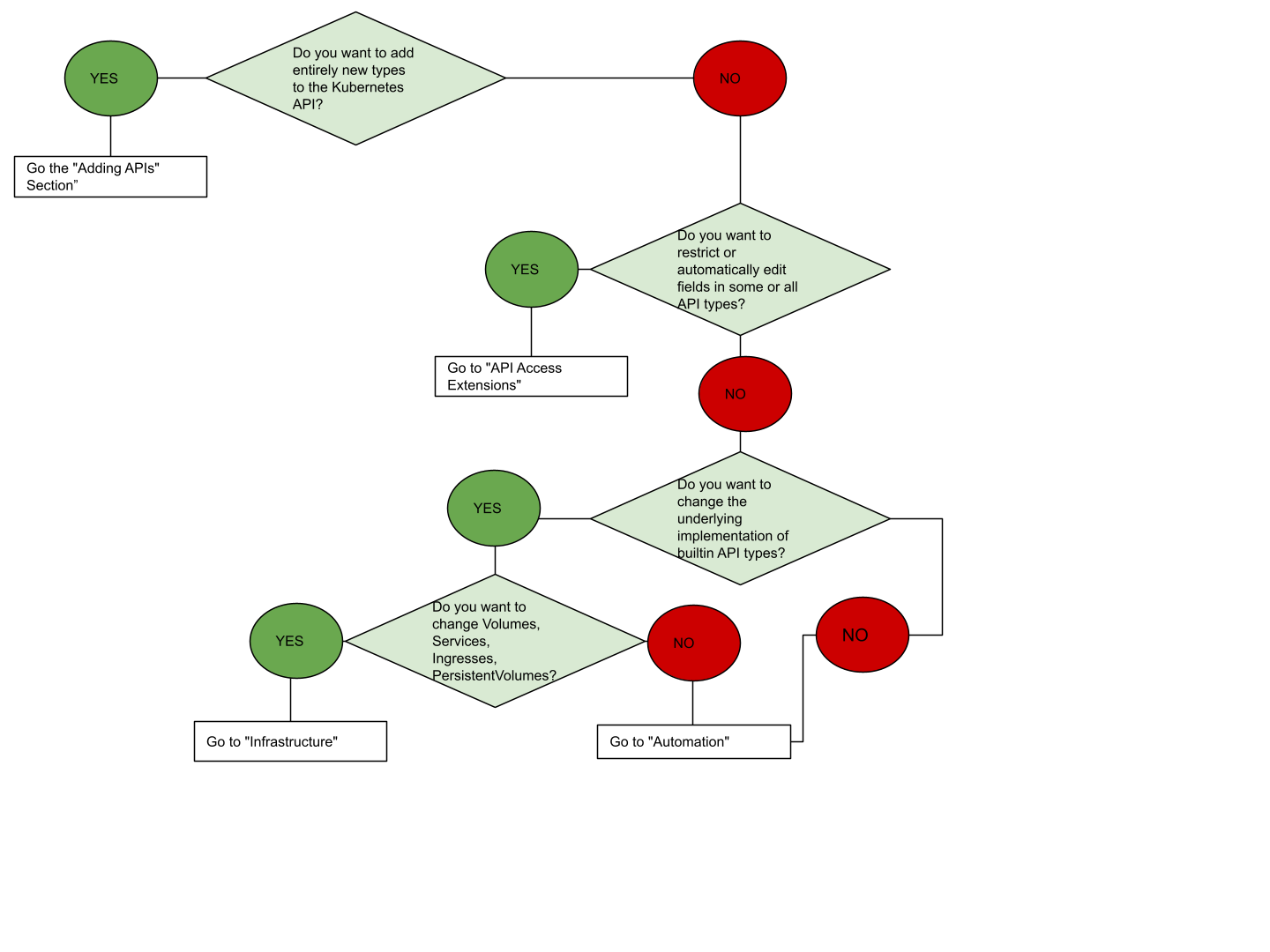

If you are unsure where to start, this flowchart can help. Note that some solutions may involve several types of extensions.

API Extensions

User-Defined Types

Consider adding a Custom Resource to Kubernetes if you want to define new controllers, application configuration objects or other declarative APIs, and to manage them using Kubernetes tools, such as kubectl.

Do not use a Custom Resource as data storage for application, user, or monitoring data.

For more about Custom Resources, see the Custom Resources concept guide.

Combining New APIs with Automation

The combination of a custom resource API and a control loop is called the Operator pattern. The Operator pattern is used to manage specific, usually stateful, applications. These custom APIs and control loops can also be used to control other resources, such as storage or policies.

Changing Built-in Resources

When you extend the Kubernetes API by adding custom resources, the added resources always fall into a new API group. You cannot replace or change existing API groups.

Adding an API does not directly let you affect the behavior of existing APIs (e.g. Pods), but API Access Extensions do.

API Access Extensions

When a request reaches the Kubernetes API Server, it is first Authenticated, then Authorized, then subject to various types of Admission Control. See Controlling Access to the Kubernetes API for more on this flow.

Each of these steps offers extension points.

Kubernetes has several built-in authentication methods that it supports. It can also sit behind an authenticating proxy, and it can send a token from an Authorization header to a remote service for verification (a webhook). All of these methods are covered in the Authentication documentation.

Authentication

Authentication maps headers or certificates in all requests to a username for the client making the request.

Kubernetes provides several built-in authentication methods, and an Authentication webhook method if those don't meet your needs.
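
To make the Authentication webhook idea more concrete, here is a minimal sketch of the decision a webhook backend might make. It assumes the API server sends a `TokenReview` object (API group `authentication.k8s.io/v1`) carrying the bearer token in `spec.token`, and expects the answer in `status`. The `KNOWN_TOKENS` table and the `review_token` function are hypothetical; a real backend would sit behind an HTTPS endpoint and consult an identity provider.

```python
import json

# Hypothetical token table, for illustration only.
KNOWN_TOKENS = {
    "s3cr3t-token": {
        "username": "jane@example.com",
        "uid": "42",
        "groups": ["developers"],
    },
}


def review_token(token_review):
    """Answer a TokenReview request with the matching user, if any."""
    token = token_review.get("spec", {}).get("token", "")
    user = KNOWN_TOKENS.get(token)

    status = {"authenticated": user is not None}
    if user is not None:
        status["user"] = user

    return {
        "apiVersion": "authentication.k8s.io/v1",
        "kind": "TokenReview",
        "status": status,
    }


request = {
    "apiVersion": "authentication.k8s.io/v1",
    "kind": "TokenReview",
    "spec": {"token": "s3cr3t-token"},
}
print(json.dumps(review_token(request), indent=2))
```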

Authorization

Authorization determines whether specific users can read, write, and do other operations on API resources. It works at the level of whole resources; it doesn't discriminate based on arbitrary object fields. If the built-in authorization options don't meet your needs, an Authorization webhook allows calling out to user-provided code to make an authorization decision.
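
The following sketch shows the kind of decision an Authorization webhook backend could make. It assumes the request arrives as a `SubjectAccessReview` object (API group `authorization.k8s.io/v1`) with the user in `spec.user` and the target in `spec.resourceAttributes`. The `ALLOWED` rule set and the `review_access` function are hypothetical. Note that the decision looks only at the user, verb, and resource, never at fields of the object itself, which matches the whole-resource granularity described above.

```python
# Hypothetical policy: (user, verb, resource) triples that are allowed.
ALLOWED = {
    ("jane@example.com", "get", "pods"),
    ("jane@example.com", "list", "pods"),
}


def review_access(subject_access_review):
    """Answer a SubjectAccessReview with an allow or deny decision."""
    spec = subject_access_review.get("spec", {})
    attrs = spec.get("resourceAttributes", {})
    key = (spec.get("user"), attrs.get("verb"), attrs.get("resource"))

    allowed = key in ALLOWED
    status = {"allowed": allowed}
    if not allowed:
        status["reason"] = "no matching rule"

    return {
        "apiVersion": "authorization.k8s.io/v1",
        "kind": "SubjectAccessReview",
        "status": status,
    }


review = {
    "apiVersion": "authorization.k8s.io/v1",
    "kind": "SubjectAccessReview",
    "spec": {
        "user": "jane@example.com",
        "resourceAttributes": {"namespace": "default", "verb": "get", "resource": "pods"},
    },
}
print(review_access(review)["status"])
```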

Dynamic Admission Control

After a request is authorized, if it is a write operation, it also goes through Admission Control steps. In addition to the built-in steps, there are several extensions:

- The Image Policy webhook restricts what images can be run in containers.

- To make arbitrary admission control decisions, a general Admission webhook can be used. Admission Webhooks can reject creations or updates.
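
As an illustration of a general Admission webhook, the sketch below rejects Pods whose container images do not come from an allowed registry. It assumes the API server sends an `AdmissionReview` object (API group `admission.k8s.io/v1`) and expects a response that echoes the request `uid`. The `review_admission` function and the `registry.example.com/` prefix are hypothetical, and a real backend would serve this logic over HTTPS.

```python
def review_admission(admission_review, allowed_registry="registry.example.com/"):
    """Allow the request only if every container image uses the allowed registry."""
    request = admission_review["request"]
    # DELETE requests carry no object, so fall back to an empty one.
    pod = request.get("object") or {}
    containers = pod.get("spec", {}).get("containers", [])

    rejected = [
        c.get("image", "")
        for c in containers
        if not c.get("image", "").startswith(allowed_registry)
    ]

    # The response must carry the same uid as the request.
    response = {"uid": request["uid"], "allowed": not rejected}
    if rejected:
        response["status"] = {
            "code": 403,
            "message": "images not from allowed registry: " + ", ".join(rejected),
        }

    return {
        "apiVersion": "admission.k8s.io/v1",
        "kind": "AdmissionReview",
        "response": response,
    }


incoming = {
    "apiVersion": "admission.k8s.io/v1",
    "kind": "AdmissionReview",
    "request": {
        "uid": "abc-123",
        "object": {"spec": {"containers": [{"name": "app", "image": "docker.io/library/nginx"}]}},
    },
}
print(review_admission(incoming)["response"])
```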

Infrastructure Extensions

Storage Plugins

Flex Volumes allow users to mount volume types without built-in support by having the kubelet call a Binary Plugin to mount the volume.

Device Plugins

Device plugins allow a node to discover new Node resources (in addition to the built-in ones like cpu and memory) via a Device Plugin.

Network Plugins

Different networking fabrics can be supported via node-level Network Plugins.

Scheduler Extensions

The scheduler is a special type of controller that watches pods, and assigns pods to nodes. The default scheduler can be replaced entirely, while continuing to use other Kubernetes components, or multiple schedulers can run at the same time.

This is a significant undertaking, and almost all Kubernetes users find they do not need to modify the scheduler.

The scheduler also supports a webhook that permits a webhook backend (scheduler extension) to filter and prioritize the nodes chosen for a pod.
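
The two roles of a scheduler extension, filtering and prioritizing, can be sketched as a pair of functions. The data shapes below (`cpu_request_m`, `free_cpu_m`, `host`, `score`) are simplified and hypothetical; they are not the wire format the scheduler uses, and the scoring rule is only one possible choice.

```python
def filter_nodes(pod, nodes):
    """Keep only nodes with enough free CPU (in millicores) for the pod."""
    return [n for n in nodes if n["free_cpu_m"] >= pod["cpu_request_m"]]


def prioritize_nodes(pod, nodes):
    """Score feasible nodes from 0 to 10, preferring the most CPU left after placement."""
    if not nodes:
        return []
    leftovers = {n["name"]: n["free_cpu_m"] - pod["cpu_request_m"] for n in nodes}
    top = max(leftovers.values()) or 1  # avoid dividing by zero
    scores = [
        {"host": name, "score": round(10 * left / top)}
        for name, left in leftovers.items()
    ]
    return sorted(scores, key=lambda s: s["score"], reverse=True)


pod = {"name": "web", "cpu_request_m": 500}
nodes = [
    {"name": "node-a", "free_cpu_m": 250},
    {"name": "node-b", "free_cpu_m": 1500},
    {"name": "node-c", "free_cpu_m": 1000},
]
feasible = filter_nodes(pod, nodes)
ranked = prioritize_nodes(pod, feasible)
print(ranked)
```

Filtering runs first so that prioritization only ever scores nodes that can actually host the pod.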



2 - Extending the Kubernetes API

2.1 - Custom Resources

Custom resources are extensions of the Kubernetes API. This page discusses when to add a custom resource to your Kubernetes cluster and when to use a standalone service. It describes the two methods for adding custom resources and how to choose between them.

Custom resources

A resource is an endpoint in the Kubernetes API that stores a collection of API objects of a certain kind; for example, the built-in pods resource contains a collection of Pod objects.

A custom resource is an extension of the Kubernetes API that is not necessarily available in a default Kubernetes installation. It represents a customization of a particular Kubernetes installation. However, many core Kubernetes functions are now built using custom resources, making Kubernetes more modular.

Custom resources can appear and disappear in a running cluster through dynamic registration, and cluster admins can update custom resources independently of the cluster itself. Once a custom resource is installed, users can create and access its objects using kubectl, just as they do for built-in resources like Pods.

Custom controllers

On their own, custom resources let you store and retrieve structured data. When you combine a custom resource with a custom controller, custom resources provide a true declarative API.

A declarative API allows you to declare or specify the desired state of your resource and tries to keep the current state of Kubernetes objects in sync with the desired state. The controller interprets the structured data as a record of the user's desired state, and continually maintains this state.

You can deploy and update a custom controller on a running cluster, independently of the cluster's lifecycle. Custom controllers can work with any kind of resource, but they are especially effective when combined with custom resources. The Operator pattern combines custom resources and custom controllers. You can use custom controllers to encode domain knowledge for specific applications into an extension of the Kubernetes API.

Should I add a custom resource to my Kubernetes Cluster?

When creating a new API, consider whether to aggregate your API with the Kubernetes cluster APIs or let your API stand alone.

| Consider API aggregation if: | Prefer a stand-alone API if: |
|---|---|
| Your API is Declarative. | Your API does not fit the Declarative model. |
| You want your new types to be readable and writable using kubectl. | kubectl support is not required. |
| You want to view your new types in a Kubernetes UI, such as dashboard, alongside built-in types. | Kubernetes UI support is not required. |
| You are developing a new API. | You already have a program that serves your API and works well. |
| You are willing to accept the format restriction that Kubernetes puts on REST resource paths, such as API Groups and Namespaces. (See the API Overview.) | You need to have specific REST paths to be compatible with an already defined REST API. |
| Your resources are naturally scoped to a cluster or namespaces of a cluster. | Cluster or namespace scoped resources are a poor fit; you need control over the specifics of resource paths. |
| You want to reuse Kubernetes API support features. | You don't need those features. |

Declarative APIs

In a Declarative API, typically:

- Your API consists of a relatively small number of relatively small objects (resources).

- The objects define configuration of applications or infrastructure.

- The objects are updated relatively infrequently.

- Humans often need to read and write the objects.

- The main operations on the objects are CRUD-y (creating, reading, updating and deleting).

- Transactions across objects are not required: the API represents a desired state, not an exact state.

Imperative APIs are not declarative.

Signs that your API might not be declarative include:

- The client says "do this", and then gets a synchronous response back when it is done.

- The client says "do this", and then gets an operation ID back, and has to check a separate Operation object to determine completion of the request.

- You talk about Remote Procedure Calls (RPCs).

- Directly storing large amounts of data; for example, > a few kB per object, or > 1000s of objects.

- High bandwidth access (10s of requests per second sustained) needed.

- Store end-user data (such as images, PII, etc.) or other large-scale data processed by applications.

- The natural operations on the objects are not CRUD-y.

- The API is not easily modeled as objects.

- You chose to represent pending operations with an operation ID or an operation object.

Should I use a ConfigMap or a custom resource?

Use a ConfigMap if any of the following apply:

- There is an existing, well-documented config file format, such as a mysql.cnf or pom.xml.

- You want to put the entire config file into one key of a ConfigMap.

- The main use of the config file is for a program running in a Pod on your cluster to consume the file to configure itself.

- Consumers of the file prefer to consume via file in a Pod or environment variable in a pod, rather than the Kubernetes API.

- You want to perform rolling updates via Deployment, etc., when the file is updated.

Note: Use a Secret for sensitive data, which is similar to a ConfigMap but more secure.

Use a custom resource (CRD or Aggregated API) if most of the following apply:

- You want to use Kubernetes client libraries and CLIs to create and update the new resource.

- You want top-level support from kubectl; for example, kubectl get my-object object-name.

- You want to build new automation that watches for updates on the new object, and then CRUD other objects, or vice versa.

- You want to write automation that handles updates to the object.

- You want to use Kubernetes API conventions like .spec, .status, and .metadata.

- You want the object to be an abstraction over a collection of controlled resources, or a summarization of other resources.

Adding custom resources

Kubernetes provides two ways to add custom resources to your cluster:

- CRDs are simple and can be created without any programming.

- API Aggregation requires programming, but allows more control over API behaviors like how data is stored and conversion between API versions.

Kubernetes provides these two options to meet the needs of different users, so that neither ease of use nor flexibility is compromised.

Aggregated APIs are subordinate API servers that sit behind the primary API server, which acts as a proxy. This arrangement is called API Aggregation (AA). To users, the Kubernetes API appears extended.

CRDs allow users to create new types of resources without adding another API server. You do not need to understand API Aggregation to use CRDs.

Regardless of how they are installed, the new resources are referred to as Custom Resources to distinguish them from built-in Kubernetes resources (like pods).

CustomResourceDefinitions

The CustomResourceDefinition API resource allows you to define custom resources. Defining a CRD object creates a new custom resource with a name and schema that you specify. The Kubernetes API serves and handles the storage of your custom resource. The name of a CRD object must be a valid DNS subdomain name.

This frees you from writing your own API server to handle the custom resource, but the generic nature of the implementation means you have less flexibility than with API server aggregation.
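
To give a sense of what defining a CRD object involves, the sketch below builds a CustomResourceDefinition manifest as a Python dictionary and prints it as JSON. It assumes the `apiextensions.k8s.io/v1` API, where the CRD name takes the form `<plural>.<group>`. The `build_crd` helper, the `stable.example.com` group, the `CronTab` kind, and the schema fields are all hypothetical, chosen only for illustration.

```python
import json


def build_crd(group, kind, plural, singular):
    """Return a namespaced CRD manifest with one served and stored version."""
    return {
        "apiVersion": "apiextensions.k8s.io/v1",
        "kind": "CustomResourceDefinition",
        # The name must be <plural>.<group>, which is a DNS subdomain name.
        "metadata": {"name": f"{plural}.{group}"},
        "spec": {
            "group": group,
            "scope": "Namespaced",
            "names": {"plural": plural, "singular": singular, "kind": kind},
            "versions": [
                {
                    "name": "v1",
                    "served": True,
                    "storage": True,
                    "schema": {
                        "openAPIV3Schema": {
                            "type": "object",
                            "properties": {
                                "spec": {
                                    "type": "object",
                                    "properties": {
                                        "cronSpec": {"type": "string"},
                                        "replicas": {"type": "integer", "minimum": 1},
                                    },
                                }
                            },
                        }
                    },
                }
            ],
        },
    }


crd = build_crd("stable.example.com", "CronTab", "crontabs", "crontab")
print(json.dumps(crd, indent=2))
```

One way to register the type would be to save the printed JSON to a file and pass it to `kubectl apply -f`, since kubectl accepts JSON manifests as well as YAML.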

Refer to the custom controller example for an example of how to register a new custom resource, work with instances of your new resource type, and use a controller to handle events.

API server aggregation

Usually, each resource in the Kubernetes API requires code that handles REST requests and manages persistent storage of objects. The main Kubernetes API server handles built-in resources like pods and services, and can also generically handle custom resources through CRDs.

The aggregation layer allows you to provide specialized implementations for your custom resources by writing and deploying your own standalone API server. The main API server delegates requests to your API server for the custom resources that it handles, making them available to all of its clients.

Choosing a method for adding custom resources

CRDs are easier to use. Aggregated APIs are more flexible. Choose the method that best meets your needs.

Typically, CRDs are a good fit if:

- You have a handful of fields

- You are using the resource within your company, or as part of a small open-source project (as opposed to a commercial product)

Comparing ease of use

CRDs are easier to create than Aggregated APIs.

| CRDs | Aggregated API |
|---|---|
| Do not require programming. Users can choose any language for a CRD controller. | Requires programming in Go and building a binary and image. |
| No additional service to run; CRDs are handled by the API server. | An additional service to create, and one that could fail. |
| No ongoing support once the CRD is created. Any bug fixes are picked up as part of normal Kubernetes Master upgrades. | May need to periodically pick up bug fixes from upstream and rebuild and update the Aggregated API server. |
| No need to handle multiple versions of your API; for example, when you control the client for this resource, you can upgrade it in sync with the API. | You need to handle multiple versions of your API; for example, when developing an extension to share with the world. |

Advanced features and flexibility

Aggregated APIs offer more advanced API features and customization of other features; for example, the storage layer.

| Feature | Description | CRDs | Aggregated API |
|---|---|---|---|
| Validation | Help users prevent errors and allow you to evolve your API independently of your clients. These features are most useful when there are many clients who can't all update at the same time. | Yes. Most validation can be specified in the CRD using OpenAPI v3.0 validation. Any other validation can be added with a Validating Webhook. | Yes, arbitrary validation checks |
| Defaulting | See above | Yes, either via the OpenAPI v3.0 validation default keyword (GA in 1.17), or via a Mutating Webhook (though this will not be run when reading from etcd for old objects). | Yes |
| Multi-versioning | Allows serving the same object through two API versions. Can help ease API changes like renaming fields. Less important if you control your client versions. | Yes | Yes |
| Custom Storage | If you need storage with a different performance mode (for example, a time-series database instead of a key-value store) or isolation for security (for example, encryption of sensitive information) | No | Yes |
| Custom Business Logic | Perform arbitrary checks or actions when creating, reading, updating or deleting an object | Yes, using Webhooks. | Yes |
| Scale Subresource | Allows systems like HorizontalPodAutoscaler and PodDisruptionBudget to interact with your new resource | Yes | Yes |
| Status Subresource | Allows fine-grained access control where the user writes the spec section and the controller writes the status section. Allows incrementing object Generation on custom resource data mutation (requires separate spec and status sections in the resource) | Yes | Yes |
| Other Subresources | Add operations other than CRUD, such as "logs" or "exec". | No | Yes |
| strategic-merge-patch | The new endpoints support PATCH with Content-Type: application/strategic-merge-patch+json. Useful for updating objects that may be modified both locally and by the server. For more information, see "Update API Objects in Place Using kubectl patch" | No | Yes |
| Protocol Buffers | The new resource supports clients that want to use Protocol Buffers | No | Yes |
| OpenAPI Schema | Is there an OpenAPI (swagger) schema for the types that can be dynamically fetched from the server? Is the user protected from misspelling field names by ensuring only allowed fields are set? Are types enforced (in other words, don't put an int in a string field)? | Yes, based on the OpenAPI v3.0 validation schema (GA in 1.16). | Yes |

Common Features

When you create a custom resource, either via a CRD or an AA, you get many features for your API, compared to implementing it outside the Kubernetes platform:

| Feature | What it does |
|---|---|
| CRUD | The new endpoints support CRUD basic operations via HTTP and kubectl |
| Watch | The new endpoints support Kubernetes Watch operations via HTTP |
| Discovery | Clients like kubectl and dashboard automatically offer list, display, and field edit operations on your resources |
| json-patch | The new endpoints support PATCH with Content-Type: application/json-patch+json |
| merge-patch | The new endpoints support PATCH with Content-Type: application/merge-patch+json |
| HTTPS | The new endpoints use HTTPS |
| Built-in Authentication | Access to the extension uses the core API server (aggregation layer) for authentication |
| Built-in Authorization | Access to the extension can reuse the authorization used by the core API server; for example, RBAC. |
| Finalizers | Block deletion of extension resources until external cleanup happens. |
| Admission Webhooks | Set default values and validate extension resources during any create/update/delete operation. |
| UI/CLI Display | Kubectl, dashboard can display extension resources. |
| Unset versus Empty | Clients can distinguish unset fields from zero-valued fields. |
| Client Libraries Generation | Kubernetes provides generic client libraries, as well as tools to generate type-specific client libraries. |
| Labels and annotations | Common metadata across objects that tools know how to edit for core and custom resources. |
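
The merge-patch row in the table above refers to JSON Merge Patch (RFC 7386). As a rough sketch of what the server does with such a request body, the function below applies a merge patch to an object represented as nested dictionaries. The `merge_patch` name and the sample object are hypothetical, and the real API server performs additional validation that is not shown here.

```python
def merge_patch(target, patch):
    """Apply a JSON Merge Patch to target and return the patched value."""
    # A non-object patch replaces the target wholesale (this includes lists).
    if not isinstance(patch, dict):
        return patch

    result = dict(target) if isinstance(target, dict) else {}
    for key, value in patch.items():
        if value is None:
            # null in the patch means "remove this key".
            result.pop(key, None)
        else:
            result[key] = merge_patch(result.get(key), value)
    return result


current = {
    "metadata": {"labels": {"app": "web", "tier": "frontend"}},
    "spec": {"replicas": 1, "ports": [80, 443]},
}
patch = {
    "metadata": {"labels": {"tier": None, "env": "prod"}},
    "spec": {"replicas": 3, "ports": [8080]},
}
patched = merge_patch(current, patch)
print(patched)
```

Because lists are replaced rather than merged, a merge patch that touches a list must send the complete new list.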

Preparing to install a custom resource

There are several points to be aware of before adding a custom resource to your cluster.

Third party code and new points of failure

While creating a CRD does not automatically add any new points of failure (for example, by causing third party code to run on your API server), packages (for example, Charts) or other installation bundles often include CRDs as well as a Deployment of third-party code that implements the business logic for a new custom resource.

Installing an Aggregated API server always involves running a new Deployment.

Storage

Custom resources consume storage space in the same way that ConfigMaps do. Creating too many custom resources may overload your API server's storage space.

Aggregated API servers may use the same storage as the main API server, in which case the same warning applies.

Authentication, authorization, and auditing

CRDs always use the same authentication, authorization, and audit logging as the built-in resources of your API server.

If you use RBAC for authorization, most RBAC roles will not grant access to the new resources (except the cluster-admin role or any role created with wildcard rules). You'll need to explicitly grant access to the new resources. CRDs and Aggregated APIs often come bundled with new role definitions for the types they add.
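As an illustration of granting access explicitly, the sketch below builds a namespaced RBAC Role for a custom resource and prints it as JSON (kubectl accepts JSON manifests as well as YAML). The API group `stable.example.com`, the resource `crontabs`, and the role name are hypothetical placeholders, not names used elsewhere in this guide.

```python
import json


def role_for_custom_resource(namespace, api_group, plural, verbs):
    """Build a Role that grants `verbs` on one custom resource type."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": f"{plural}-editor", "namespace": namespace},
        "rules": [
            {
                "apiGroups": [api_group],  # the group of the custom resource
                "resources": [plural],     # plural resource name, as in the URL
                "verbs": list(verbs),
            }
        ],
    }


role = role_for_custom_resource(
    namespace="default",
    api_group="stable.example.com",  # hypothetical group
    plural="crontabs",               # hypothetical resource
    verbs=["get", "list", "watch", "create", "update", "patch", "delete"],
)
print(json.dumps(role, indent=2))
```

The Role still has to be bound to a user, group, or ServiceAccount with a RoleBinding before it has any effect.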

Aggregated API servers may or may not use the same authentication, authorization, and auditing as the primary API server.

Accessing a custom resource

Kubernetes client libraries can be used to access custom resources. Not all client libraries support custom resources. The Go and Python client libraries do.

When you add a custom resource, you can access it using:

- kubectl

- The Kubernetes dynamic client.

- A REST client that you write.

- A client generated using Kubernetes client generation tools (generating one is an advanced undertaking, but some projects may provide a client along with the CRD or AA).
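If you write your own REST client, the main thing to get right is the URL layout. The sketch below builds the path for a custom resource under `/apis`; the group, version, and resource names are hypothetical, and the helper is an illustration rather than part of any client library.

```python
def custom_resource_path(group, version, plural, namespace=None, name=None):
    """Return the API server path for a custom resource collection or object."""
    parts = ["/apis", group, version]
    if namespace is not None:
        # Namespaced resources live under /namespaces/<namespace>/
        parts += ["namespaces", namespace]
    parts.append(plural)
    if name is not None:
        parts.append(name)
    return "/".join(parts)


# Hypothetical CronTab resource in the stable.example.com group.
collection = custom_resource_path("stable.example.com", "v1", "crontabs", "default")
single = custom_resource_path("stable.example.com", "v1", "crontabs", "default", "my-cron")
cluster_wide = custom_resource_path("stable.example.com", "v1", "crontabs")
print(collection)
print(single)
print(cluster_wide)
```

A GET on the collection path lists objects, and a GET on the single-object path fetches one of them; authentication and TLS are handled by whatever HTTP client you use.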


2.2 - Extending the Kubernetes API with the aggregation layer

The aggregation layer allows Kubernetes to be extended with additional APIs, beyond what is offered by the core Kubernetes APIs.

The additional APIs can either be ready-made solutions such as service-catalog, or APIs that you develop yourself.

The aggregation layer is different from Custom Resources, which are a way to make the kube-apiserver recognise new kinds of object.

Aggregation layer

The aggregation layer runs in-process with the kube-apiserver. Until an extension resource is registered, the aggregation layer will do nothing. To register an API, you add an APIService object, which "claims" the URL path in the Kubernetes API. At that point, the aggregation layer will proxy anything sent to that API path (e.g. /apis/myextension.mycompany.io/v1/…) to the registered APIService.
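The routing decision described above can be modelled in a few lines. This is a toy sketch, not the kube-apiserver implementation: it assumes each registered APIService claims one group/version pair, and the backend names and the `widgets` resource are made up for illustration.

```python
def route(path, api_services):
    """Return the backend that would serve `path` in this toy model."""
    parts = path.strip("/").split("/")
    # Aggregated APIs live under /apis/<group>/<version>/...
    if len(parts) >= 3 and parts[0] == "apis":
        claimed = (parts[1], parts[2])
        if claimed in api_services:
            return api_services[claimed]
    # Anything that is not claimed is handled locally.
    return "kube-apiserver"


# One registered APIService (hypothetical backend name).
api_services = {("myextension.mycompany.io", "v1"): "extension-apiserver"}

print(route("/apis/myextension.mycompany.io/v1/widgets", api_services))
print(route("/apis/apps/v1/deployments", api_services))
print(route("/api/v1/pods", api_services))
```

Until an entry is added to `api_services`, every request falls through to the local handler, which mirrors the "does nothing until registered" behaviour.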

The most common way to implement the APIService is to run an extension API server in Pod(s) that run in your cluster. If you're using the extension API server to manage resources in your cluster, the extension API server (also written as "extension-apiserver") is typically paired with one or more controllers. The apiserver-builder library provides a skeleton for both extension API servers and the associated controller(s).

Response latency

Extension API servers should have low latency networking to and from the kube-apiserver.

Discovery requests are required to round-trip from the kube-apiserver in five seconds or less.

If your extension API server cannot achieve that latency requirement, consider making changes that let you meet it.


3 - Compute, Storage, and Networking Extensions

3.1 - Network Plugins

Network plugins in Kubernetes come in a few flavors:

- CNI plugins: adhere to the Container Network Interface (CNI) specification, designed for interoperability.

- Kubernetes follows the v0.4.0 release of the CNI specification.

- Kubenet plugin: implements basic cbr0 using the bridge and host-local CNI plugins

Installation

The kubelet has a single default network plugin, and a default network common to the entire cluster. It probes for plugins when it starts up, remembers what it finds, and executes the selected plugin at appropriate times in the pod lifecycle (this is only true for Docker, as CRI manages its own CNI plugins). There are two Kubelet command line parameters to keep in mind when using plugins:

- cni-bin-dir: Kubelet probes this directory for plugins on startup

- network-plugin: The network plugin to use from cni-bin-dir. It must match the name reported by a plugin probed from the plugin directory. For CNI plugins, this is cni.

Network Plugin Requirements

Besides providing the NetworkPlugin interface to configure and clean up pod networking, the plugin may also need specific support for kube-proxy. The iptables proxy obviously depends on iptables, and the plugin may need to ensure that container traffic is made available to iptables. For example, if the plugin connects containers to a Linux bridge, the plugin must set the net/bridge/bridge-nf-call-iptables sysctl to 1 to ensure that the iptables proxy functions correctly. If the plugin does not use a Linux bridge (but instead something like Open vSwitch or some other mechanism) it should ensure container traffic is appropriately routed for the proxy.

By default if no kubelet network plugin is specified, the noop plugin is used, which sets net/bridge/bridge-nf-call-iptables=1 to ensure simple configurations (like Docker with a bridge) work correctly with the iptables proxy.

CNI

The CNI plugin is selected by passing Kubelet the --network-plugin=cni command-line option. Kubelet reads a file from --cni-conf-dir (default /etc/cni/net.d) and uses the CNI configuration from that file to set up each pod's network. The CNI configuration file must match the CNI specification, and any required CNI plugins referenced by the configuration must be present in --cni-bin-dir (default /opt/cni/bin).

If there are multiple CNI configuration files in the directory, the kubelet uses the configuration file that comes first by name in lexicographic order.
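The selection rule can be sketched as follows. The set of file extensions treated as configuration files is an assumption made for this example, and `select_cni_config` is a hypothetical helper, not a kubelet function.

```python
import os

# Assumption for this sketch: only these extensions count as CNI configuration.
CONFIG_EXTENSIONS = (".conf", ".conflist", ".json")


def select_cni_config(filenames):
    """Return the configuration file that sorts first by name, or None."""
    candidates = sorted(
        name for name in filenames
        if os.path.splitext(name)[1] in CONFIG_EXTENSIONS
    )
    return candidates[0] if candidates else None


# Hypothetical contents of the CNI configuration directory.
chosen = select_cni_config(["99-loopback.conf", "10-calico.conflist", "README.md"])
print(chosen)
```

This is why CNI configuration files are commonly given a numeric prefix: the prefix controls which file wins when several are present.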

In addition to the CNI plugin specified by the configuration file, Kubernetes requires the standard CNI lo plugin, at minimum version 0.2.0.

Support hostPort

The CNI networking plugin supports hostPort. You can use the official portmap plugin offered by the CNI plugin team or use your own plugin with portMapping functionality.

If you want to enable hostPort support, you must specify the portMappings capability in the CNI configuration file in your cni-conf-dir.

For example:

{
  "name": "k8s-pod-network",
  "cniVersion": "0.3.0",
  "plugins": [
    {
      "type": "calico",
      "log_level": "info",
      "datastore_type": "kubernetes",
      "nodename": "127.0.0.1",
      "ipam": {
        "type": "host-local",
        "subnet": "usePodCidr"
      },
      "policy": {
        "type": "k8s"
      },
      "kubernetes": {
        "kubeconfig": "/etc/cni/net.d/calico-kubeconfig"
      }
    },
    {
      "type": "portmap",
      "capabilities": {"portMappings": true}
    }
  ]
}
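A quick way to check a configuration like the one above is to parse it and look for a plugin that declares the capability. The helper below is a hypothetical sketch that uses only the `plugins` and `capabilities` keys shown in the example; the embedded configuration is a trimmed-down copy for illustration.

```python
import json


def plugins_with_capability(conflist_text, capability):
    """Return the types of the plugins that declare `capability` as true."""
    conf = json.loads(conflist_text)
    return [
        plugin.get("type")
        for plugin in conf.get("plugins", [])
        if plugin.get("capabilities", {}).get(capability) is True
    ]


# Trimmed-down version of the configuration above.
CONFLIST = """
{
  "name": "k8s-pod-network",
  "cniVersion": "0.3.0",
  "plugins": [
    {"type": "calico"},
    {"type": "portmap", "capabilities": {"portMappings": true}}
  ]
}
"""

enabled = plugins_with_capability(CONFLIST, "portMappings")
print(enabled)
```

An empty result means no plugin in the chain has opted in, so hostPort requests would not be handled by this configuration.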

Support traffic shaping

Experimental Feature

The CNI networking plugin also supports pod ingress and egress traffic shaping. You can use the official bandwidth plugin offered by the CNI plugin team or use your own plugin with bandwidth control functionality.

If you want to enable traffic shaping support, you must add the bandwidth plugin to your CNI configuration file (default /etc/cni/net.d) and ensure that the binary is included in your CNI bin dir (default /opt/cni/bin).

{
  "name": "k8s-pod-network",
  "cniVersion": "0.3.0",
  "plugins": [
    {
      "type": "calico",
      "log_level": "info",
      "datastore_type": "kubernetes",
      "nodename": "127.0.0.1",
      "ipam": {
        "type": "host-local",
        "subnet": "usePodCidr"
      },
      "policy": {
        "type": "k8s"
      },
      "kubernetes": {
        "kubeconfig": "/etc/cni/net.d/calico-kubeconfig"
      }
    },
    {
      "type": "bandwidth",
      "capabilities": {"bandwidth": true}
    }
  ]
}

Now you can add the kubernetes.io/ingress-bandwidth and kubernetes.io/egress-bandwidth annotations to your pod.

For example:

apiVersion: v1
kind: Pod
metadata:
  annotations:
    kubernetes.io/ingress-bandwidth: 1M
    kubernetes.io/egress-bandwidth: 1M
...
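The annotation values use Kubernetes quantity notation. The sketch below converts a small subset of that notation (whole numbers with decimal or binary suffixes) into a plain integer; it assumes the result is interpreted as bits per second, and it is not the parser Kubernetes itself uses.

```python
import re

# Subset of quantity suffixes handled by this sketch.
_MULTIPLIERS = {
    "": 1,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
}


def parse_quantity(text):
    """Convert a value such as '1M' or '10Mi' into an integer."""
    match = re.fullmatch(r"(\d+)(Ki|Mi|Gi|Ti|k|M|G|T)?", text.strip())
    if match is None:
        raise ValueError(f"unsupported quantity: {text!r}")
    number, suffix = match.groups()
    return int(number) * _MULTIPLIERS[suffix or ""]


limit = parse_quantity("1M")  # the value used in the Pod example
print(limit)
```

Note that `M` is a decimal suffix and `Mi` a binary one, so `1M` and `1Mi` describe slightly different limits.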

kubenet

Kubenet is a very basic, simple network plugin, on Linux only. It does not, of itself, implement more advanced features like cross-node networking or network policy. It is typically used together with a cloud provider that sets up routing rules for communication between nodes, or in single-node environments.

Kubenet creates a Linux bridge named cbr0 and creates a veth pair for each pod with the host end of each pair connected to cbr0. The pod end of the pair is assigned an IP address allocated from a range assigned to the node either through configuration or by the controller-manager. cbr0 is assigned an MTU matching the smallest MTU of an enabled normal interface on the host.

The plugin requires a few things:

- The standard CNI bridge, lo and host-local plugins are required, at minimum version 0.2.0. Kubenet will first search for them in /opt/cni/bin. Specify cni-bin-dir to supply additional search path. The first found match will take effect.

- Kubelet must be run with the --network-plugin=kubenet argument to enable the plugin

- Kubelet should also be run with the --non-masquerade-cidr=<clusterCidr> argument to ensure traffic to IPs outside this range will use IP masquerade.

- The node must be assigned an IP subnet through either the --pod-cidr kubelet command-line option or the --allocate-node-cidrs=true --cluster-cidr=<cidr> controller-manager command-line options.

Customizing the MTU (with kubenet)

The MTU should always be configured correctly to get the best networking performance. Network plugins will usually try to infer a sensible MTU, but sometimes the logic will not result in an optimal MTU. For example, if the Docker bridge or another interface has a small MTU, kubenet will currently select that MTU. Or if you are using IPSEC encapsulation, the MTU must be reduced, and this calculation is out-of-scope for most network plugins.

Where needed, you can specify the MTU explicitly with the network-plugin-mtu kubelet option. For example, on AWS the eth0 MTU is typically 9001, so you might specify --network-plugin-mtu=9001. If you're using IPSEC you might reduce it to allow for encapsulation overhead; for example: --network-plugin-mtu=8873.

This option is provided to the network-plugin; currently only kubenet supports network-plugin-mtu.
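The arithmetic behind the two example values is a simple subtraction. In the sketch below, the 128-byte overhead is inferred from the difference between 9001 and 8873 in the example above; it is an assumption for illustration, and the real overhead depends on your encapsulation settings.

```python
def mtu_with_overhead(link_mtu, overhead_bytes):
    """Return the MTU left for pod traffic after encapsulation overhead."""
    if overhead_bytes < 0 or overhead_bytes >= link_mtu:
        raise ValueError("overhead must be between 0 and the link MTU")
    return link_mtu - overhead_bytes


link_mtu = 9001   # typical eth0 MTU on AWS, per the text above
overhead = 128    # assumed: inferred from 9001 - 8873

pod_mtu = mtu_with_overhead(link_mtu, overhead)
flag = f"--network-plugin-mtu={pod_mtu}"
print(flag)
```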

Usage Summary

- --network-plugin=cni specifies that we use the cni network plugin with actual CNI plugin binaries located in --cni-bin-dir (default /opt/cni/bin) and CNI plugin configuration located in --cni-conf-dir (default /etc/cni/net.d).

- --network-plugin=kubenet specifies that we use the kubenet network plugin with CNI bridge, lo and host-local plugins placed in /opt/cni/bin or cni-bin-dir.

- --network-plugin-mtu=9001 specifies the MTU to use, currently only used by the kubenet network plugin.


3.2 - Device Plugins

Use the Kubernetes device plugin framework to implement plugins for GPUs, NICs, FPGAs, InfiniBand, and similar resources that require vendor-specific setup.

FEATURE STATE: Kubernetes v1.10 [beta]

Kubernetes provides a device plugin framework that you can use to advertise system hardware resources to the Kubelet.

Instead of customizing the code for Kubernetes itself, vendors can implement a device plugin that you deploy either manually or as a DaemonSet. The targeted devices include GPUs, high-performance NICs, FPGAs, InfiniBand adapters, and other similar computing resources that may require vendor specific initialization and setup.

Device plugin registration

The kubelet exports a Registration gRPC service:

service Registration {
  rpc Register(RegisterRequest) returns (Empty) {}
}

A device plugin can register itself with the kubelet through this gRPC service.

During the registration, the device plugin needs to send:

- The name of its Unix socket.

- The Device Plugin API version against which it was built.

- The ResourceName it wants to advertise. Here ResourceName needs to follow the extended resource naming scheme as vendor-domain/resourcetype. (For example, an NVIDIA GPU is advertised as nvidia.com/gpu.)
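The vendor-domain/resourcetype shape can be checked with a small validator. The rules below are a simplified approximation chosen for this sketch (a dotted lowercase domain, one slash, and a non-empty resource type); the kubelet's own validation may accept or reject names differently.

```python
import re

_LABEL = r"[a-z0-9]([-a-z0-9]*[a-z0-9])?"
# Simplification: require at least one dot in the vendor domain.
_DOMAIN = re.compile(rf"{_LABEL}(\.{_LABEL})+")
_RESOURCE = re.compile(r"[A-Za-z0-9]([-A-Za-z0-9_.]*[A-Za-z0-9])?")


def looks_like_extended_resource(name):
    """Return True if `name` has the vendor-domain/resourcetype shape."""
    domain, separator, resource = name.partition("/")
    if not separator or "/" in resource:
        return False
    return bool(_DOMAIN.fullmatch(domain)) and bool(_RESOURCE.fullmatch(resource))


for candidate in ["nvidia.com/gpu", "hardware-vendor.example/foo", "gpu"]:
    print(candidate, looks_like_extended_resource(candidate))
```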

Following a successful registration, the device plugin sends the kubelet the list of devices it manages, and the kubelet is then in charge of advertising those resources to the API server as part of the kubelet node status update. For example, after a device plugin registers hardware-vendor.example/foo with the kubelet and reports two healthy devices on a node, the node status is updated to advertise that the node has 2 "Foo" devices installed and available.

Then, users can request devices in a Container specification as they request other types of resources, with the following limitations:

- Extended resources are only supported as integer resources and cannot be overcommitted.

- Devices cannot be shared among Containers.

Suppose a Kubernetes cluster is running a device plugin that advertises resource hardware-vendor.example/foo on certain nodes. Here is an example of a pod requesting this resource to run a demo workload:

---
apiVersion: v1
kind: Pod
metadata:
  name: demo-pod
spec:
  containers:
    - name: demo-container-1
      image: k8s.gcr.io/pause:2.0
      resources:
        limits:
          hardware-vendor.example/foo: 2
#
# This Pod needs 2 of the hardware-vendor.example/foo devices
# and can only schedule onto a Node that's able to satisfy
# that need.
#
# If the Node has more than 2 of those devices available, the
# remainder would be available for other Pods to use.
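The comments in the manifest describe a simple counting rule, sketched below. This is a toy model of the fit check, not scheduler code; the function names are hypothetical, and the node inventory is made up.

```python
def fits(requested, allocatable):
    """Return True if every requested device count can be satisfied."""
    for resource, count in requested.items():
        # Extended resources are whole numbers and cannot be overcommitted.
        if isinstance(count, bool) or not isinstance(count, int) or count < 0:
            raise ValueError(f"{resource}: must be a non-negative integer")
        if count > allocatable.get(resource, 0):
            return False
    return True


def remaining(requested, allocatable):
    """Return what is left on the node after the request is placed."""
    return {name: total - requested.get(name, 0) for name, total in allocatable.items()}


pod_request = {"hardware-vendor.example/foo": 2}
node = {"hardware-vendor.example/foo": 3}  # hypothetical node with 3 devices

if fits(pod_request, node):
    left_over = remaining(pod_request, node)
    print(left_over)
```

With three devices on the node, the Pod fits and one device stays available for other Pods; with only one device, the Pod would not be scheduled there.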

Device plugin implementation

The general workflow of a device plugin includes the following steps:

Initialization. During this phase, the device plugin performs vendor specific initialization and setup to make sure the devices are in a ready state.

The plugin starts a gRPC service, with a Unix socket under host path /var/lib/kubelet/device-plugins/, that implements the following interfaces:

service DevicePlugin {
  // GetDevicePluginOptions returns options to be communicated with Device Manager.
  rpc GetDevicePluginOptions(Empty) returns (DevicePluginOptions) {}

  // ListAndWatch returns a stream of List of Devices
  // Whenever a Device state change or a Device disappears, ListAndWatch
  // returns the new list
  rpc ListAndWatch(Empty) returns (stream ListAndWatchResponse) {}

  // Allocate is called during container creation so that the Device
  // Plugin can run device specific operations and instruct Kubelet
  // of the steps to make the Device available in the container
  rpc Allocate(AllocateRequest) returns (AllocateResponse) {}

  // GetPreferredAllocation returns a preferred set of devices to allocate
  // from a list of available ones. The resulting preferred allocation is not
  // guaranteed to be the allocation ultimately performed by the
  // devicemanager. It is only designed to help the devicemanager make a more
  // informed allocation decision when possible.
  rpc GetPreferredAllocation(PreferredAllocationRequest) returns (PreferredAllocationResponse) {}

  // PreStartContainer is called, if indicated by Device Plugin during registration phase,
  // before each container start. Device plugin can run device specific operations
  // such as resetting the device before making devices available to the container.
  rpc PreStartContainer(PreStartContainerRequest) returns (PreStartContainerResponse) {}
}

Note: Plugins are not required to provide useful implementations for GetPreferredAllocation() or PreStartContainer(). Flags indicating which (if any) of these calls are available should be set in the DevicePluginOptions message sent back by a call to GetDevicePluginOptions(). The kubelet will always call GetDevicePluginOptions() to see which optional functions are available, before calling any of them directly.
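The gating described in the note can be modelled without gRPC. The sketch below is a toy simulation, not kubelet code: the two option field names are written as the author of this example believes they appear in the DevicePluginOptions message and should be treated as assumptions, and `FakePlugin` and `allocate_devices` are hypothetical. The real kubelet also calls PreStartContainer later, before the container starts, rather than immediately after Allocate.

```python
from dataclasses import dataclass, field


@dataclass
class DevicePluginOptions:
    # Assumed field names; check the API definition for your version.
    pre_start_required: bool = False
    get_preferred_allocation_available: bool = False


@dataclass
class FakePlugin:
    """Stand-in for a device plugin that records which calls it receives."""
    options: DevicePluginOptions
    calls: list = field(default_factory=list)

    def get_device_plugin_options(self):
        self.calls.append("GetDevicePluginOptions")
        return self.options

    def get_preferred_allocation(self, available, size):
        self.calls.append("GetPreferredAllocation")
        return available[-size:]  # arbitrary preference for the demo

    def allocate(self, device_ids):
        self.calls.append("Allocate")

    def pre_start_container(self, device_ids):
        self.calls.append("PreStartContainer")


def allocate_devices(plugin, available, size):
    """Ask for options first, then call only the optional RPCs that are offered."""
    options = plugin.get_device_plugin_options()
    chosen = available[:size]
    if options.get_preferred_allocation_available:
        chosen = plugin.get_preferred_allocation(available, size)
    plugin.allocate(chosen)
    if options.pre_start_required:
        plugin.pre_start_container(chosen)
    return chosen


basic = FakePlugin(DevicePluginOptions())
allocate_devices(basic, ["dev0", "dev1", "dev2"], 2)
print(basic.calls)
```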

The plugin registers itself with the kubelet through the Unix socket at host path /var/lib/kubelet/device-plugins/kubelet.sock.

After successfully registering itself, the device plugin runs in serving mode, during which it keeps monitoring device health and reports back to the kubelet upon any device state changes. It is also responsible for serving Allocate gRPC requests. During Allocate, the device plugin may do device-specific preparation; for example, GPU cleanup or QRNG initialization. If the operations succeed, the device plugin returns an AllocateResponse that contains container runtime configurations for accessing the allocated devices. The kubelet passes this information to the container runtime.

Handling kubelet restarts

A device plugin is expected to detect kubelet restarts and re-register itself with the new kubelet instance. In the current implementation, a new kubelet instance deletes all the existing Unix sockets under /var/lib/kubelet/device-plugins when it starts. A device plugin can monitor the deletion of its Unix socket and re-register itself upon such an event.
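One simple way to notice the deletion is to check periodically whether the socket file still exists. The sketch below demonstrates the idea in a temporary directory with an ordinary file standing in for the socket; the function names are hypothetical, and a production plugin would more likely use a file system notification mechanism than polling.

```python
import os
import tempfile


def reregister_if_removed(socket_path, register):
    """Call `register` when the plugin socket has disappeared.

    Returns True if a re-registration was triggered.
    """
    if os.path.exists(socket_path):
        return False
    register(socket_path)
    return True


with tempfile.TemporaryDirectory() as plugin_dir:
    # An ordinary file stands in for the plugin's Unix socket.
    sock = os.path.join(plugin_dir, "example.sock")
    events = []

    def register(path):
        # A real plugin would recreate its socket and call Register here.
        open(path, "w").close()
        events.append("registered")

    register(sock)                                   # initial registration
    first = reregister_if_removed(sock, register)    # socket still present
    os.remove(sock)                                  # simulate a kubelet restart
    second = reregister_if_removed(sock, register)   # socket gone, so re-register

print(first, second, events)
```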

Device plugin deployment

You can deploy a device plugin as a DaemonSet, as a package for your node's operating system, or manually.

The canonical directory /var/lib/kubelet/device-plugins requires privileged access, so a device plugin must run in a privileged security context. If you're deploying a device plugin as a DaemonSet, /var/lib/kubelet/device-plugins must be mounted as a Volume in the plugin's PodSpec.

If you choose the DaemonSet approach you can rely on Kubernetes to place the device plugin's Pod onto Nodes, to restart the daemon Pod after failure, and to help automate upgrades.

API compatibility

Kubernetes device plugin support is in beta. The API may change before stabilization, in incompatible ways. As a project, Kubernetes recommends that device plugin developers:

- Watch for changes in future releases.

- Support multiple versions of the device plugin API for backward/forward compatibility.

If you enable the DevicePlugins feature and run device plugins on nodes that need to be upgraded to a Kubernetes release with a newer device plugin API version, upgrade your device plugins to support both versions before upgrading these nodes. Taking that approach will ensure the continuous functioning of the device allocations during the upgrade.

Monitoring Device Plugin Resources

FEATURE STATE: Kubernetes v1.15 [beta]

In order to monitor resources provided by device plugins, monitoring agents need to be able to discover the set of devices that are in-use on the node and obtain metadata to describe which container the metric should be associated with. Prometheus metrics exposed by device monitoring agents should follow the Kubernetes Instrumentation Guidelines, identifying containers using pod, namespace, and container prometheus labels.

The kubelet provides a gRPC service to enable discovery of in-use devices, and to provide metadata for these devices:

// PodResourcesLister is a service provided by the kubelet that provides information about the
// node resources consumed by pods and containers on the node
service PodResourcesLister {
  rpc List(ListPodResourcesRequest) returns (ListPodResourcesResponse) {}
}

The gRPC service is served over a unix socket at /var/lib/kubelet/pod-resources/kubelet.sock. Monitoring agents for device plugin resources can be deployed as a daemon, or as a DaemonSet. The canonical directory /var/lib/kubelet/pod-resources requires privileged access, so monitoring agents must run in a privileged security context. If a device monitoring agent is running as a DaemonSet, /var/lib/kubelet/pod-resources must be mounted as a Volume in the device monitoring agent's PodSpec.

Support for the "PodResources service" requires the KubeletPodResources feature gate to be enabled. It is enabled by default starting with Kubernetes 1.15 and is v1 since Kubernetes 1.20.

Device Plugin integration with the Topology Manager

FEATURE STATE: Kubernetes v1.18 [beta]

The Topology Manager is a Kubelet component that allows resources to be co-ordinated in a Topology aligned manner. In order to do this, the Device Plugin API was extended to include a TopologyInfo struct.

message TopologyInfo {
  repeated NUMANode nodes = 1;
}

message NUMANode {
  int64 ID = 1;
}

Device Plugins that wish to leverage the Topology Manager can send back a populated TopologyInfo struct as part of the device registration, along with the device IDs and the health of the device. The device manager will then use this information to consult with the Topology Manager and make resource assignment decisions.

TopologyInfo supports a nodes field that is either nil (the default) or a list of NUMA nodes. This lets the Device Plugin publish a device that can span NUMA nodes.

An example TopologyInfo struct populated for a device by a Device Plugin:

pluginapi.Device{ID: "25102017", Health: pluginapi.Healthy, Topology:&pluginapi.TopologyInfo{Nodes: []*pluginapi.NUMANode{&pluginapi.NUMANode{ID: 0,},}}}

Device plugin examples

Here are some examples of device plugin implementations:


4 - Operator pattern

Operators are software extensions to Kubernetes that make use of custom resources to manage applications and their components. Operators follow Kubernetes principles, notably the control loop.

Motivation

The Operator pattern captures the key aim of a human operator who is managing a service or set of services. Human operators who look after specific applications and services have deep knowledge of how the system ought to behave, how to deploy it, and how to react if there are problems.

People who run workloads on Kubernetes often like to use automation to take care of repeatable tasks. The Operator pattern captures how you can write code to automate a task beyond what Kubernetes itself provides.

Operators in Kubernetes

Kubernetes is designed for automation. Out of the box, you get lots of built-in automation from the core of Kubernetes. You can use Kubernetes to automate deploying and running workloads, and you can automate how Kubernetes does that.

Kubernetes' controllers concept lets you extend the cluster's behaviour without modifying the code of Kubernetes itself. Operators are clients of the Kubernetes API that act as controllers for a Custom Resource.

An example Operator

Some of the things that you can use an operator to automate include:

- deploying an application on demand

- taking and restoring backups of that application's state

- handling upgrades of the application code alongside related changes such as database schemas or extra configuration settings

- publishing a Service to applications that don't support Kubernetes APIs to discover them

- simulating failure in all or part of your cluster to test its resilience

- choosing a leader for a distributed application without an internal member election process

What might an Operator look like in more detail? Here's an example:

- A custom resource named SampleDB, that you can configure into the cluster.

- A Deployment that makes sure a Pod is running that contains the controller part of the operator.

- A container image of the operator code.

- Controller code that queries the control plane to find out what SampleDB resources are configured.

- The core of the Operator is code to tell the API server how to make reality match the configured resources.

  - If you add a new SampleDB, the operator sets up PersistentVolumeClaims to provide durable database storage, a StatefulSet to run SampleDB and a Job to handle initial configuration.

  - If you delete it, the Operator takes a snapshot, then makes sure that the StatefulSet and Volumes are also removed.

- The operator also manages regular database backups. For each SampleDB resource, the operator determines when to create a Pod that can connect to the database and take backups. These Pods would rely on a ConfigMap and / or a Secret that has database connection details and credentials.

- Because the Operator aims to provide robust automation for the resource it manages, there would be additional supporting code. For this example, code checks to see if the database is running an old version and, if so, creates Job objects that upgrade it for you.
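The "make reality match the configured resources" step is a reconcile loop. The sketch below reduces it to pure data so it can run without a cluster: `desired` stands for the SampleDB resources found in the API, `actual` for what is currently running, and the action names are hypothetical labels rather than real API calls.

```python
def reconcile(desired, actual):
    """Return the actions needed to make `actual` match `desired`.

    Both arguments map a SampleDB name to its configuration.
    """
    actions = []
    for name in sorted(desired.keys() - actual.keys()):
        # New SampleDB: set up storage, the StatefulSet, and the initial Job.
        actions.append(("create", name))
    for name in sorted(actual.keys() - desired.keys()):
        # Deleted SampleDB: take a snapshot, then remove what was created.
        actions.append(("snapshot-and-delete", name))
    for name in sorted(desired.keys() & actual.keys()):
        if desired[name] != actual[name]:
            actions.append(("update", name))
    return actions


desired = {"orders-db": {"version": "2"}, "users-db": {"version": "1"}}
actual = {"orders-db": {"version": "1"}, "legacy-db": {"version": "1"}}

plan = reconcile(desired, actual)
print(plan)
```

A real controller runs this comparison repeatedly, triggered by watch events, so that the cluster converges even if an earlier action failed.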

Deploying Operators

The most common way to deploy an Operator is to add the Custom Resource Definition and its associated Controller to your cluster. The Controller will normally run outside of the control plane, much as you would run any containerized application. For example, you can run the controller in your cluster as a Deployment.

Using an Operator

Once you have an Operator deployed, you'd use it by adding, modifying or deleting the kind of resource that the Operator uses. Following the above example, you would set up a Deployment for the Operator itself, and then:

kubectl get SampleDB # find configured databases

kubectl edit SampleDB/example-database # manually change some settings

…and that's it! The Operator will take care of applying the changes as well as keeping the existing service in good shape.

Writing your own Operator

If there isn't an Operator in the ecosystem that implements the behavior you want, you can code your own.

You can also implement an Operator (that is, a Controller) using any language / runtime that can act as a client for the Kubernetes API.

Following are a few libraries and tools you can use to write your own cloud native Operator.

Caution:

This section links to third party projects that provide functionality required by Kubernetes. The Kubernetes project authors aren't responsible for these projects. This page follows CNCF website guidelines by listing projects alphabetically. To add a project to this list, read the content guide before submitting a change.

What's next

- Learn more about Custom Resources

- Find ready-made operators on OperatorHub.io to suit your use case

- Publish your operator for other people to use

- Read CoreOS' original article that introduced the Operator pattern (this is an archived version of the original article).

- Read an article from Google Cloud about best practices for building Operators

5 - Service Catalog

Service Catalog is an extension API that enables applications running in Kubernetes clusters to easily use external managed software offerings, such as a datastore service offered by a cloud provider.

It provides a way to list, provision, and bind with external Managed Services from Service Brokers without needing detailed knowledge about how those services are created or managed.

A service broker, as defined by the Open Service Broker API spec, is an endpoint for a set of managed services offered and maintained by a third party, which could be a cloud provider such as AWS, GCP, or Azure.

Some examples of managed services are Microsoft Azure Cloud Queue, Amazon Simple Queue Service, and Google Cloud Pub/Sub, but they can be any software offering that can be used by an application.

Using Service Catalog, a cluster operator can browse the list of managed services offered by a service broker, provision an instance of a managed service, and bind with it to make it available to an application in the Kubernetes cluster.

Example use case

An application developer wants to use message queuing as part of their application running in a Kubernetes cluster.

However, they do not want to deal with the overhead of setting such a service up and administering it themselves.

Fortunately, there is a cloud provider that offers message queuing as a managed service through its service broker.

A cluster operator can set up Service Catalog and use it to communicate with the cloud provider's service broker to provision an instance of the message queuing service and make it available to the application within the Kubernetes cluster.

The application developer therefore does not need to be concerned with the implementation details or management of the message queue.

The application can access the message queue as a service.

Architecture

Service Catalog uses the Open Service Broker API to communicate with service brokers, acting as an intermediary for the Kubernetes API Server to negotiate the initial provisioning and retrieve the credentials necessary for the application to use a managed service.

It is implemented as an extension API server and a controller, using etcd for storage. It also uses the aggregation layer available in Kubernetes 1.7+ to present its API.

API Resources

Service Catalog installs the servicecatalog.k8s.io API and provides the following Kubernetes resources:

- ClusterServiceBroker: An in-cluster representation of a service broker, encapsulating its server connection details. These are created and managed by cluster operators who wish to use that broker server to make new types of managed services available within their cluster.

- ClusterServiceClass: A managed service offered by a particular service broker. When a new ClusterServiceBroker resource is added to the cluster, the Service Catalog controller connects to the service broker to obtain a list of available managed services. It then creates a new ClusterServiceClass resource corresponding to each managed service.

- ClusterServicePlan: A specific offering of a managed service. For example, a managed service may have different plans available, such as a free tier or paid tier, or it may have different configuration options, such as using SSD storage or having more resources. Similar to ClusterServiceClass, when a new ClusterServiceBroker is added to the cluster, Service Catalog creates a new ClusterServicePlan resource corresponding to each Service Plan available for each managed service.

- ServiceInstance: A provisioned instance of a ClusterServiceClass. These are created by cluster operators to make a specific instance of a managed service available for use by one or more in-cluster applications. When a new ServiceInstance resource is created, the Service Catalog controller connects to the appropriate service broker and instructs it to provision the service instance.

- ServiceBinding: Access credentials to a ServiceInstance. These are created by cluster operators who want their applications to make use of a ServiceInstance. Upon creation, the Service Catalog controller creates a Kubernetes Secret containing connection details and credentials for the Service Instance, which can be mounted into Pods.

Authentication

Service Catalog supports these methods of authentication:

Usage

A cluster operator can use Service Catalog API Resources to provision managed services and make them available within a Kubernetes cluster. The steps involved are:

- Listing the managed services and Service Plans available from a service broker.

- Provisioning a new instance of the managed service.

- Binding to the managed service, which returns the connection credentials.

- Mapping the connection credentials into the application.

Listing managed services and Service Plans

First, a cluster operator must create a ClusterServiceBroker resource within the servicecatalog.k8s.io group. This resource contains the URL and connection details necessary to access a service broker endpoint.

This is an example of a ClusterServiceBroker resource:

apiVersion: servicecatalog.k8s.io/v1beta1
kind: ClusterServiceBroker
metadata:
  name: cloud-broker
spec:
  # Points to the endpoint of a service broker. (This example is not a working URL.)
  url: https://servicebroker.somecloudprovider.com/v1alpha1/projects/service-catalog/brokers/default
  #####
  # Additional values can be added here, which may be used to communicate
  # with the service broker, such as bearer token info or a caBundle for TLS.
  #####

The following is a sequence diagram illustrating the steps involved in listing managed services and Plans available from a service broker:

Once the ClusterServiceBroker resource is added to Service Catalog, it triggers a call to the external service broker for a list of available services.

The service broker returns a list of available managed services and a list of Service Plans, which are cached locally as ClusterServiceClass and ClusterServicePlan resources respectively.

A cluster operator can then get the list of available managed services using the following command:

kubectl get clusterserviceclasses -o=custom-columns=SERVICE\ NAME:.metadata.name,EXTERNAL\ NAME:.spec.externalName

It should output a list of service names with a format similar to:

SERVICE NAME                           EXTERNAL NAME
4f6e6cf6-ffdd-425f-a2c7-3c9258ad2468   cloud-provider-service
...                                    ...

The cluster operator can also view the available Service Plans using the following command:

kubectl get clusterserviceplans -o=custom-columns=PLAN\ NAME:.metadata.name,EXTERNAL\ NAME:.spec.externalName

It should output a list of plan names with a format similar to:

PLAN NAME                              EXTERNAL NAME
86064792-7ea2-467b-af93-ac9694d96d52   service-plan-name
...                                    ...
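
When these listings need to be consumed by a script rather than read by eye, the same two fields (.metadata.name and .spec.externalName) can be pulled out of the JSON form of the output. The following is a minimal sketch, assuming the text comes from `kubectl get clusterserviceclasses -o json` or `kubectl get clusterserviceplans -o json`; the function name and the sample data are hypothetical and only illustrate the shape of the list.

```python
import json


def list_external_names(kubectl_json):
    """Return (metadata.name, spec.externalName) pairs from a kubectl JSON list."""
    doc = json.loads(kubectl_json)
    pairs = []
    for item in doc.get("items", []):
        name = item["metadata"]["name"]
        # externalName is read defensively in case an item lacks a spec.
        external = (item.get("spec") or {}).get("externalName", "")
        pairs.append((name, external))
    return pairs


# Hypothetical sample shaped like `kubectl get clusterserviceclasses -o json`.
SAMPLE = json.dumps({
    "kind": "List",
    "items": [
        {
            "metadata": {"name": "4f6e6cf6-ffdd-425f-a2c7-3c9258ad2468"},
            "spec": {"externalName": "cloud-provider-service"},
        },
    ],
})

if __name__ == "__main__":
    for name, external in list_external_names(SAMPLE):
        print(name, external)
```

The external name is the value that a ServiceInstance later refers to, so a script would typically look up that column rather than the generated resource name.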

Provisioning a new instance

A cluster operator can initiate the provisioning of a new instance by creating a ServiceInstance resource.

This is an example of a ServiceInstance resource:

apiVersion: servicecatalog.k8s.io/v1beta1
kind: ServiceInstance
metadata:
  name: cloud-queue-instance
  namespace: cloud-apps
spec:
  # References one of the previously returned services
  clusterServiceClassExternalName: cloud-provider-service
  clusterServicePlanExternalName: service-plan-name
  #####
  # Additional parameters can be added here,
  # which may be used by the service broker.
  #####

The following sequence diagram illustrates the steps involved in provisioning a new instance of a managed service:

- When the ServiceInstance resource is created, Service Catalog initiates a call to the external service broker to provision an instance of the service.

- The service broker creates a new instance of the managed service and returns an HTTP response.

- A cluster operator can then check the status of the instance to see if it is ready.
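
The readiness check in the last step can also be automated. The sketch below assumes that the ServiceInstance reports its state through a status.conditions list containing an entry of type Ready, following the common Kubernetes conditions convention; that assumption, the function name, and the sample objects are illustrative and are not taken from this page.

```python
def is_ready(instance):
    """Return True if the object has a condition of type Ready with status "True"."""
    # Assumption: readiness is reported under status.conditions.
    conditions = (instance.get("status") or {}).get("conditions") or []
    return any(
        cond.get("type") == "Ready" and cond.get("status") == "True"
        for cond in conditions
    )


# Hypothetical objects, trimmed to the fields the check reads.
PENDING = {"status": {"conditions": [{"type": "Ready", "status": "False"}]}}
READY = {"status": {"conditions": [{"type": "Ready", "status": "True"}]}}

if __name__ == "__main__":
    print(is_ready(PENDING))  # False
    print(is_ready(READY))    # True
```

In practice the input would be the parsed output of `kubectl get serviceinstance cloud-queue-instance -n cloud-apps -o json`, and a caller would poll until the function returns True or a timeout expires.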

Binding to a managed service

After a new instance has been provisioned, a cluster operator must bind to the managed service to get the connection credentials and service account details necessary for the application to use the service. This is done by creating a ServiceBinding resource.

The following is an example of a ServiceBinding resource:

apiVersion: servicecatalog.k8s.io/v1beta1
kind: ServiceBinding
metadata:
  name: cloud-queue-binding
  namespace: cloud-apps
spec:
  instanceRef:
    name: cloud-queue-instance
  #####
  # Additional information can be added here, such as a secretName or
  # service account parameters, which may be used by the service broker.
  #####
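
Because the binding refers to the instance by name, the two manifests have to agree on the instance name and namespace. One way to keep them consistent is to generate both from the same values. The following is a rough sketch that uses only fields shown in the examples above; the helper names are hypothetical, and the JSON it prints is meant to be passed to `kubectl apply -f -`, which accepts JSON as well as YAML.

```python
import json

API_VERSION = "servicecatalog.k8s.io/v1beta1"


def service_instance(name, namespace, class_name, plan_name):
    """Build a ServiceInstance manifest from the fields used in the example above."""
    return {
        "apiVersion": API_VERSION,
        "kind": "ServiceInstance",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "clusterServiceClassExternalName": class_name,
            "clusterServicePlanExternalName": plan_name,
        },
    }


def service_binding(name, instance):
    """Build a ServiceBinding that points at the given instance manifest."""
    meta = instance["metadata"]
    return {
        "apiVersion": API_VERSION,
        "kind": "ServiceBinding",
        # The binding is placed in the same namespace as the instance it references.
        "metadata": {"name": name, "namespace": meta["namespace"]},
        "spec": {"instanceRef": {"name": meta["name"]}},
    }


if __name__ == "__main__":
    instance = service_instance(
        "cloud-queue-instance", "cloud-apps",
        "cloud-provider-service", "service-plan-name",
    )
    binding = service_binding("cloud-queue-binding", instance)
    print(json.dumps(binding, indent=2))
```

Deriving the binding from the instance manifest avoids a mismatched instanceRef, which would otherwise only surface after both resources have been submitted.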

The following sequence diagram illustrates the steps involved in binding to a managed service instance:

- After the ServiceBinding is created, Service Catalog makes a call to the external service broker requesting the information necessary to bind with the service instance.

- The service broker enables the application permissions/roles for the appropriate service account.

- The service broker returns the information necessary to connect and access the managed service instance. This is provider- and service-specific, so the information returned may differ between Service Providers and their managed services.
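
The returned connection details end up in a Kubernetes Secret, and values under a Secret's data field are base64-encoded. To inspect what a broker actually returned, the values can be decoded from the JSON form of the Secret. This is a minimal sketch, assuming text values; the Secret name, key, and value in the sample are hypothetical.

```python
import base64


def decode_secret_data(secret):
    """Decode the base64 values under a Secret's data field into text."""
    data = secret.get("data") or {}
    # Assumption: values are UTF-8 text; binary payloads would need bytes instead.
    return {key: base64.b64decode(value).decode("utf-8") for key, value in data.items()}


# Hypothetical Secret shaped like `kubectl get secret <name> -o json`.
SAMPLE_SECRET = {
    "kind": "Secret",
    "metadata": {"name": "provider-queue-credentials", "namespace": "cloud-apps"},
    "data": {"topic": base64.b64encode(b"orders").decode("ascii")},
}

if __name__ == "__main__":
    for key, value in decode_secret_data(SAMPLE_SECRET).items():
        print(key, "=", value)
```

Decoded values are credentials, so a script like this is better suited to one-off debugging than to anything that writes its output to logs.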

Mapping the connection credentials

After binding, the final step involves mapping the connection credentials and service-specific information into the application.

These pieces of information are stored in secrets that the application in the cluster can access and use to connect directly with the managed service.

Pod configuration file

One method to perform this mapping is to use a declarative Pod configuration.

The following example describes how to map service account credentials into the application. A Secret named sa-key is exposed through a volume named provider-cloud-key, and the application mounts this volume at /var/secrets/provider. Assuming the Secret holds its credentials under a key named key.json, the file appears at /var/secrets/provider/key.json, and the environment variable PROVIDER_APPLICATION_CREDENTIALS is set to that path.

...
spec:
  volumes:
    - name: provider-cloud-key
      secret:
        secretName: sa-key
  containers:
    ...
      volumeMounts:
        - name: provider-cloud-key
          mountPath: /var/secrets/provider
      env:
        - name: PROVIDER_APPLICATION_CREDENTIALS
          value: "/var/secrets/provider/key.json"
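
On the application side, the environment variable only carries a file path, so the application still has to open the mounted file itself. The sketch below shows one way to do that, assuming the key file contains JSON (suggested by the key.json name but not stated on this page); the function name is hypothetical.

```python
import json
import os

ENV_VAR = "PROVIDER_APPLICATION_CREDENTIALS"


def load_credentials(environ=os.environ):
    """Read the credentials file whose path is given by the environment variable."""
    path = environ.get(ENV_VAR)
    if not path:
        raise RuntimeError(ENV_VAR + " is not set; check the env section of the Pod spec")
    # Assumption: the mounted key file is a JSON document.
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)
```

Passing the environment as a parameter keeps the function easy to exercise outside a Pod, for example by calling it with a dictionary that points at a local test file.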

The following example describes how to map secret values into application environment variables. In this example, the messaging queue topic name is mapped from a secret named provider-queue-credentials with a key named topic to the environment variable TOPIC.

...
env:
  - name: "TOPIC"
    valueFrom:
      secretKeyRef:
        name: provider-queue-credentials
        key: topic
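
With this mapping in place, the application reads the topic name like any other environment variable. The following is a small sketch of that read with an explicit failure when the variable is missing or empty; the function name and error message are hypothetical.

```python
import os


def topic_from_env(environ=os.environ):
    """Return the queue topic name injected through the TOPIC variable."""
    # Strip whitespace in case the Secret value was created with a trailing newline.
    topic = environ.get("TOPIC", "").strip()
    if not topic:
        raise RuntimeError("TOPIC is not set; check the secretKeyRef in the Pod spec")
    return topic


if __name__ == "__main__":
    print(topic_from_env({"TOPIC": "orders\n"}))  # orders
```

Failing at startup when the value is absent tends to be easier to diagnose than a connection error that appears later when the application first uses the queue.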

What's next