Migrating from dockershim

This section presents information you need to know when migrating from dockershim to other container runtimes.

Since the announcement of dockershim deprecation in Kubernetes 1.20, there have been questions about how this will affect various workloads and Kubernetes installations. The Dockershim Deprecation FAQ blog post may help you understand the problem better.

It is recommended to migrate from dockershim to alternative container runtimes. Check out the container runtimes section to learn about your options. Make sure to report any issues you encounter with the migration, so that they can be fixed in a timely manner and your cluster is ready for dockershim removal.

1 - Check whether Dockershim deprecation affects you

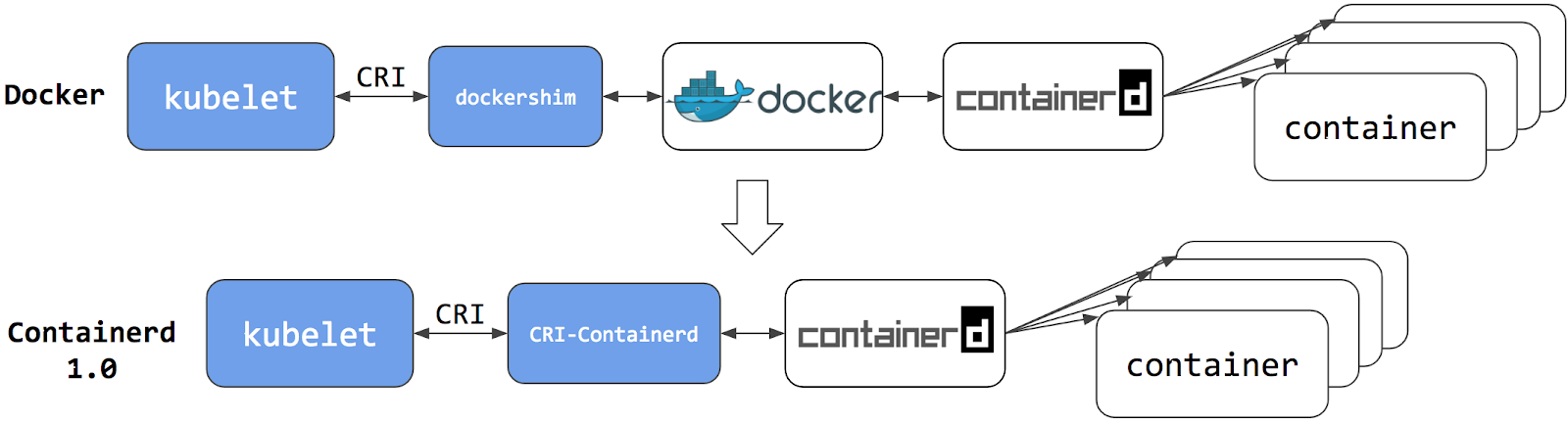

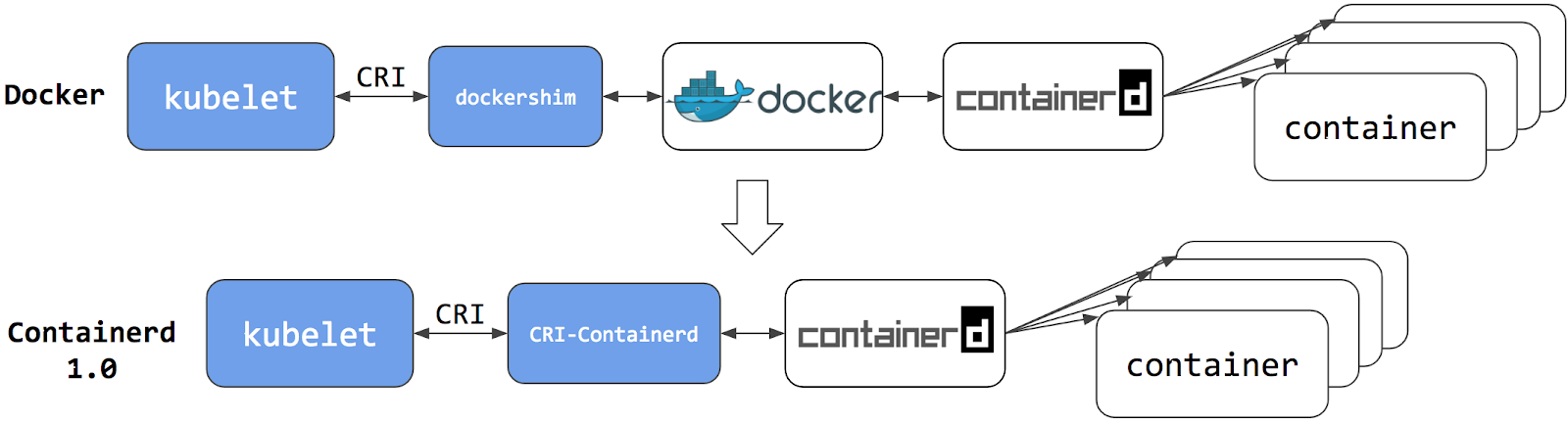

The dockershim component of Kubernetes allows the use of Docker as a Kubernetes container runtime.

Kubernetes' built-in dockershim component was deprecated in release v1.20.

This page explains how your cluster could be using Docker as a container runtime, provides details on the role that dockershim plays when in use, and shows steps you can take to check whether any workloads could be affected by dockershim deprecation.

Finding if your app has dependencies on Docker

If you are using Docker for building your application containers, you can still run these containers on any container runtime. This use of Docker does not count as a dependency on Docker as a container runtime.

When an alternative container runtime is used, executing Docker commands may either not work or yield unexpected output. This is how you can find whether you have a dependency on Docker:

- Make sure no privileged Pods execute Docker commands.
- Check that scripts and apps running on nodes outside of Kubernetes infrastructure do not execute Docker commands. These might be:
  - SSH sessions to nodes for troubleshooting;
  - node startup scripts;
  - monitoring and security agents installed directly on nodes.
- Check third-party tools that perform the privileged operations mentioned above. See Migrating telemetry and security agents from dockershim for more information.
- Make sure there are no indirect dependencies on dockershim behavior. This is an edge case and unlikely to affect your application. Some tooling may be configured to react to Docker-specific behaviors, for example, to raise an alert on specific metrics or to search for a specific log message as part of troubleshooting instructions. If you have such tooling configured, test its behavior on a test cluster before migration.
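Part of the node-level check in the list above can be scripted. The following is a minimal sketch, assuming a POSIX shell and a grep that supports `-H`; it scans the script files you pass it for lines that appear to invoke the Docker CLI. The function name `find_docker_cli_calls` and its list of subcommands are illustrative assumptions, not an official tool: a hit is only something to review, and an empty result does not prove there is no dependency.

```shell
# find_docker_cli_calls FILE...
# Hypothetical helper: prints "file:line:text" for every line in FILE... that
# looks like a Docker CLI invocation, such as "docker ps" or "sudo docker exec".
# The subcommand list below is an assumption; extend it for your environment.
find_docker_cli_calls() {
  # "docker" must not be preceded by a letter, digit, "_", "." or "-", and must
  # be followed by whitespace and a known subcommand. So "my-docker ps" and
  # "dockerd --debug" are skipped, while "/usr/bin/docker ps" still matches.
  grep -nHE '(^|[^[:alnum:]_.-])docker[[:space:]]+(ps|inspect|exec|logs|top|stats|run|stop|kill|rm|info)([[:space:]]|$)' "$@"
}
```

For example, `find_docker_cli_calls /etc/rc.local /usr/local/bin/*.sh` on a node; those paths are placeholders for wherever your startup and maintenance scripts actually live.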

Dependency on Docker explained

A container runtime is software that can execute the containers that make up a Kubernetes pod. Kubernetes is responsible for orchestration and scheduling of Pods; on each node, the kubelet uses the container runtime interface (CRI) as an abstraction so that you can use any compatible container runtime.

In its earliest releases, Kubernetes offered compatibility with one container runtime: Docker.

Later in the Kubernetes project's history, cluster operators wanted to adopt additional container runtimes. The CRI was designed to allow this kind of flexibility, and the kubelet began supporting CRI. However, because Docker existed before the CRI specification was invented, the Kubernetes project created an adapter component, dockershim. The dockershim adapter allows the kubelet to interact with Docker as if Docker were a CRI-compatible runtime.

You can read about it in the Kubernetes Containerd integration goes GA blog post.
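A quick way to see which nodes still run Docker through dockershim is the container runtime version each node reports in its status, `.status.nodeInfo.containerRuntimeVersion` (also shown in the CONTAINER-RUNTIME column of `kubectl get nodes -o wide`). Nodes using dockershim report a value starting with `docker://`, while containerd nodes report `containerd://`. The filter below is a small sketch built on that assumption; `nodes_using_docker` is a hypothetical name.

```shell
# nodes_using_docker
# Hypothetical filter: reads "node-name<TAB>runtime-version" lines on stdin and
# prints the names of nodes whose runtime version starts with "docker://".
nodes_using_docker() {
  awk -F '\t' '$2 ~ /^docker:\/\// { print $1 }'
}

# Example (requires access to a cluster):
#   kubectl get nodes \
#     -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.nodeInfo.containerRuntimeVersion}{"\n"}{end}' \
#     | nodes_using_docker
```

An empty result means no node reported a `docker://` runtime at the time you ran the command.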

Switching to Containerd as a container runtime eliminates the middleman. All the same containers can be run by container runtimes like Containerd as before. But now, since containers are scheduled directly with the container runtime, they are not visible to Docker. So any Docker tooling or fancy UI you might have used before to check on these containers is no longer available.

You cannot get container information using the docker ps or docker inspect commands. As you cannot list containers, you cannot get logs, stop containers, or execute something inside a container using docker exec.

Note: If you're running workloads via Kubernetes, the best way to stop a container is through the Kubernetes API rather than directly through the container runtime (this advice applies to all container runtimes, not only Docker).
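For day-to-day troubleshooting, `kubectl` and the CRI command-line tool `crictl` (from the cri-tools project) cover most of the Docker commands mentioned above. The lookup function below is a hedged cheat sheet of common equivalents, not an exhaustive mapping. The function name is hypothetical, `crictl` must be installed and pointed at your runtime on the node for its suggestions to work, and you should confirm flags with `crictl --help` for your version.

```shell
# docker_equivalent SUBCOMMAND
# Hypothetical cheat-sheet helper: prints a suggested replacement for a Docker
# CLI subcommand once containers are managed by a CRI runtime instead of Docker.
docker_equivalent() {
  case "$1" in
    ps)      echo "crictl ps" ;;
    inspect) echo "crictl inspect CONTAINER_ID" ;;
    logs)    echo "kubectl logs POD -c CONTAINER  (or: crictl logs CONTAINER_ID)" ;;
    exec)    echo "kubectl exec -it POD -c CONTAINER -- COMMAND  (or: crictl exec -it CONTAINER_ID COMMAND)" ;;
    # Stop workloads through the Kubernetes API; a controller such as a
    # Deployment may start a replacement Pod.
    stop|kill|rm) echo "kubectl delete pod POD" ;;
    images)  echo "crictl images" ;;
    pull)    echo "crictl pull IMAGE" ;;
    stats)   echo "crictl stats" ;;
    # Building and pushing images with Docker keeps working.
    build|push) echo "docker $1  (unchanged)" ;;
    *)       echo "no suggestion for: docker $1" >&2; return 1 ;;
  esac
}
```

For example, `docker_equivalent logs` prints the `kubectl logs` form first, because going through the Kubernetes API works the same way regardless of the node's runtime.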

You can still pull images or build them using the docker build command. But images built or pulled by Docker are not visible to the container runtime and Kubernetes. They need to be pushed to some registry so that Kubernetes can use them.
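A minimal sketch of that workflow: build the image with Docker, then push it to a registry the cluster can pull from. The `build_and_push` function, the `DOCKER` override variable, and the registry host `registry.example.com` used in the example are hypothetical; the override exists only so the sketch can be dry-run, for example with `DOCKER=echo`.

```shell
# build_and_push REGISTRY IMAGE TAG [CONTEXT_DIR]
# Hypothetical helper: builds an image with Docker and pushes it to REGISTRY so
# that the cluster's container runtime can pull it. Prints the image reference.
# Set DOCKER to override the docker command (e.g. DOCKER=echo for a dry run).
build_and_push() (
  ref="$1/$2:$3"
  context=${4:-.}
  # Send docker's own output to stderr so stdout carries only the reference.
  ${DOCKER:-docker} build -t "$ref" "$context" >&2 || exit 1
  ${DOCKER:-docker} push "$ref" >&2 || exit 1
  printf '%s\n' "$ref"
)
```

For example, `build_and_push registry.example.com team/app v1` builds from the current directory and prints `registry.example.com/team/app:v1`, the reference to use in the Pod's `image` field. The function body runs in a subshell, so its variables do not leak into the caller.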

2 - Migrating telemetry and security agents from dockershim

With Kubernetes 1.20, dockershim was deprecated. From the Dockershim Deprecation FAQ you might already know that most apps do not have a direct dependency on the runtime hosting containers. However, there are still many telemetry and security agents that depend on Docker to collect container metadata, logs, and metrics. This document aggregates information on how to detect these dependencies, and links to instructions on migrating these agents to use generic tools or alternative runtimes.

Telemetry and security agents

There are a few ways agents may run on a Kubernetes cluster. Agents may run on nodes directly or as DaemonSets.

Why do telemetry agents rely on Docker?

Historically, Kubernetes was built on top of Docker. Kubernetes managed networking and scheduling, while Docker placed and operated containers on a node. So you could get scheduling-related metadata, like a pod name, from Kubernetes and container state information from Docker. Over time, more runtimes were created to manage containers. There are also projects and Kubernetes features that generalize container status information extraction across many runtimes.

Some agents are tied specifically to the Docker tool. These agents may run commands like docker ps or docker top to list containers and processes, or docker logs to subscribe to Docker logs. With the deprecation of Docker as a container runtime, these commands will no longer work.
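Agents that only need to know which containers run on their node can often get that from the Kubernetes API instead of docker ps. The sketch below assumes `kubectl` access with permission to list Pods in all namespaces. It prints each Pod scheduled on a node together with the container IDs from its status; each ID is prefixed with the runtime that manages it, for example `docker://` or `containerd://`. The function name is hypothetical, and a production agent would more likely use a Kubernetes client library than call `kubectl`.

```shell
# list_containers_on_node NODE_NAME
# Hypothetical helper: a rough, runtime-neutral stand-in for "docker ps".
# Prints "namespace/pod<TAB>container IDs" for every Pod scheduled on NODE_NAME.
# Pods whose containers have not started yet print an empty ID list.
list_containers_on_node() {
  kubectl get pods --all-namespaces \
    --field-selector "spec.nodeName=$1" \
    -o jsonpath='{range .items[*]}{.metadata.namespace}{"/"}{.metadata.name}{"\t"}{.status.containerStatuses[*].containerID}{"\n"}{end}'
}
```

For example, `list_containers_on_node "$(hostname)"` inside a node agent, assuming the node's registered name matches its hostname, which is not always the case.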

Identify DaemonSets that depend on Docker

If a pod wants to make calls to the dockerd running on the node, the pod must either:

- mount the filesystem containing the Docker daemon's privileged socket, as a volume; or
- mount the specific path of the Docker daemon's privileged socket directly, also as a volume.

For example, on COS images, Docker exposes its Unix domain socket at /var/run/docker.sock. This means that the pod spec will include a hostPath volume mount of /var/run/docker.sock.
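Besides checking running Pods, you can look for such mounts in manifests kept in version control, before anything is deployed. This is a rough sketch, assuming YAML manifests in a local directory and a grep that supports `-r`. It only matches `path:` lines whose value is `/var/run/docker.sock`, `/run/docker.sock`, or the parent directory `/var/run` or `/run`, so review each hit to confirm it belongs to a hostPath volume. The function name is hypothetical.

```shell
# manifests_mounting_docker_socket [DIR]
# Hypothetical helper: lists files under DIR (default: current directory) that
# contain a "path:" line pointing at the Docker socket or its parent directory.
# Optional double quotes and a trailing slash around the value are tolerated.
manifests_mounting_docker_socket() {
  grep -rlE '^[[:space:]]*(-[[:space:]]+)?path:[[:space:]]*"?(/var)?/run(/docker\.sock)?/?"?[[:space:]]*$' "${1:-.}"
}
```

For example, `manifests_mounting_docker_socket deploy/` prints one file name per match; a probe such as `httpGet` with `path: /healthz` is not reported.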

Here's a sample shell script to find Pods that have a mount directly mapping the Docker socket. For each matching pod, the script outputs the namespace and name of the pod, followed by its hostPath volume paths. You can remove the grep '/var/run/docker.sock' to review other mounts.

```shell
kubectl get pods --all-namespaces \
  -o=jsonpath='{range .items[*]}{"\n"}{.metadata.namespace}{":\t"}{.metadata.name}{":\t"}{range .spec.volumes[*]}{.hostPath.path}{", "}{end}{end}' \
  | sort \
  | grep '/var/run/docker.sock'
```

Note: There are alternative ways for a pod to access Docker on the host. For instance, the parent directory /var/run may be mounted instead of the full path (like in this example). The script above only detects the most common uses.
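To also catch the parent-directory case described in the note, you could replace the final grep of the kubectl pipeline above with a broader filter. The sketch below is one hedged option. It assumes the `namespace:<TAB>name:<TAB>path, path, ` line format that pipeline produces, and flags Pods whose hostPath list includes the Docker socket (under /var/run or /run) or one of its parent directories (/var/run, /run, /var, or /). The path list and the function name are assumptions, and the filter still only sees hostPath mounts.

```shell
# flag_docker_socket_access
# Hypothetical filter: reads lines like "ns:<TAB>pod:<TAB>/path/a, /path/b, "
# on stdin and keeps those whose hostPath list includes the Docker socket or
# one of its parent directories (with or without a trailing slash).
flag_docker_socket_access() {
  # Each path is preceded by a tab or space and followed by a comma.
  grep -E '[[:space:]](/|/var/?|/var/run/?|/run/?|/var/run/docker\.sock|/run/docker\.sock),'
}
```

To use it, keep the kubectl and sort stages unchanged and pipe into `flag_docker_socket_access` instead of `grep '/var/run/docker.sock'`. Mounting a broad parent such as / or /var does not prove the Pod uses Docker, but it is worth reviewing.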

Detecting Docker dependency from node agents

If your cluster nodes are customized and have additional security and telemetry agents installed, make sure to check with the agent's vendor whether it has a dependency on Docker.

Telemetry and security agent vendors

We keep a work-in-progress version of migration instructions for various telemetry and security agent vendors in a Google doc.

Please contact the vendor to get up-to-date instructions for migrating from dockershim.